Series

Work with NEON OS & IS Data - Plant Phenology & Temperature

This series covers how to import, manipulate, format and plot time series data stored in .csv format in R. We will work with temperature (NEON.DP1.00002) and plant phenology (NEON.DP1.10055) data to explore working with and visualizing data with different time scale intervals.

To find each tutorial, select from the left menu to continue after this introduction.

Learning Objectives

After completing the series, you will be able to:

- work with data.frames in R (dplyr package),

- convert timestamps stored as text strings to R date or datetime (e.g. POSIX) classes (lubridate package),

- aggregate data across different time scales (day vs month) and

- plot time series data (ggplot2 package).

Things You’ll Need To Complete This Series

Setup RStudio

To complete the tutorial series you will need an updated version of R and, preferably, RStudio installed on your computer.

R

is a programming language that specializes in statistical computing. It is a

powerful tool for exploratory data analysis. To interact with R, we strongly

recommend

RStudio,

an interactive development environment (IDE).

Install R Packages

You can chose to install packages with each lesson or you can download all of the necessary R packages now.

-

dplyr:

install.packages("dplyr") -

ggplot2:

install.packages("ggplot2") -

lubridate:

install.packages("lubridate") -

scales:

install.packages("scales") -

tidyr:

install.packages("tidyr") -

gridExtra:

install.packages("gridExtra")

More on Packages in R – Adapted from Software Carpentry.

Work With NEON's Plant Phenology Data

Authors: Megan A. Jones, Natalie Robinson, Lee Stanish

Last Updated: Apr 10, 2025

Many organisms, including plants, show patterns of change across seasons - the different stages of this observable change are called phenophases. In this tutorial we explore how to work with NEON plant phenophase data.

Objectives

After completing this activity, you will be able to:

- work with NEON Plant Phenology Observation data.

- use dplyr functions to filter data.

- plot time series data in a bar plot using ggplot the function.

Things You’ll Need To Complete This Tutorial

You will need the most current version of R and, preferably, RStudio loaded

on your computer to complete this tutorial.

Install R Packages

-

neonUtilities:

install.packages("neonUtilities") -

ggplot2:

install.packages("ggplot2") -

dplyr:

install.packages("dplyr")

More on Packages in R – Adapted from Software Carpentry.

Download Data

This tutorial is designed to have you download data directly from the NEON

portal API using the neonUtilities package. However, you can also directly

download this data, prepackaged, from FigShare. This data set includes all the

files needed for the Work with NEON OS & IS Data - Plant Phenology & Temperature

tutorial series. The data are in the format you would receive if downloading them

using the zipsByProduct() function in the neonUtilities package.

Additional Resources

- NEON data portal

- NEON Plant Phenology Observations data product user guide

- RStudio's data wrangling (dplyr/tidyr) cheatsheet

- NEONScience GitHub Organization

- nneo API wrapper on CRAN

Plants change throughout the year - these are phenophases. Why do they change?

Explore Phenology Data

The following sections provide a brief overview of the NEON plant phenology observation data. When designing a research project using this data, you need to consult the documents associated with this data product and not rely solely on this summary.

The following description of the NEON Plant Phenology Observation data is modified from the data product user guide.

NEON Plant Phenology Observation Data

NEON collects plant phenology data and provides it as NEON data product DP1.10055.001.

The plant phenology observations data product provides in-situ observations of the phenological status and intensity of tagged plants (or patches) during discrete observations events.

Sampling occurs at all terrestrial field sites at site and season specific intervals. During Phase I (dominant species) sampling (pre-2021), three species with 30 individuals each are sampled. In 2021, Phase II (community) sampling will begin, with <=20 species with 5 or more individuals sampled will occur.

Status-based Monitoring

NEON employs status-based monitoring, in which the phenological condition of an individual is reported any time that individual is observed. At every observations bout, records are generated for every phenophase that is occurring and for every phenophase not occurring. With this approach, events (such as leaf emergence in Mediterranean zones, or flowering in many desert species) that may occur multiple times during a single year, can be captured. Continuous reporting of phenophase status enables quantification of the duration of phenophases rather than just their date of onset while allows enabling the explicit quantification of uncertainty in phenophase transition dates that are introduced by monitoring in discrete temporal bouts.

Specific products derived from this sampling include the observed phenophase status (whether or not a phenophase is occurring) and the intensity of phenophases for individuals in which phenophase status = ‘yes’. Phenophases reported are derived from the USA National Phenology Network (USA-NPN) categories. The number of phenophases observed varies by growth form and ranges from 1 phenophase (cactus) to 7 phenophases (semi-evergreen broadleaf). In this tutorial we will focus only on the state of the phenophase, not the phenophase intensity data.

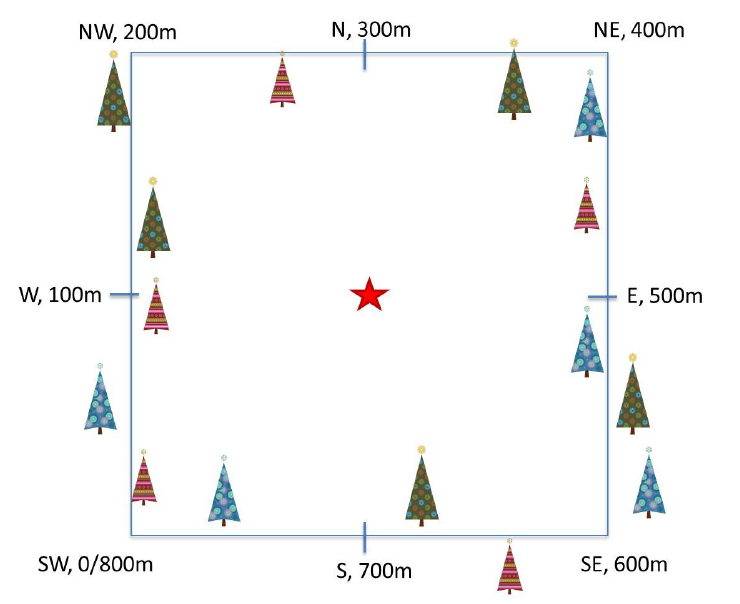

Phenology Transects

Plant phenology observations occurs at all terrestrial NEON sites along an 800 meter square loop transect (primary) and within a 200 m x 200 m plot located within view of a canopy level, tower-mounted, phenology camera.

Timing of Observations

At each site, there are:

- ~50 observation bouts per year.

- no more that 100 sampling points per phenology transect.

- no more than 9 sampling points per phenocam plot.

- 1 annual measurement per year to collect annual size and disease status measurements from each sampling point.

Available Data Tables

In the downloaded data packet, data are available in two main files

- phe_statusintensity: Plant phenophase status and intensity data

- phe_perindividual: Geolocation and taxonomic identification for phenology plants

- phe_perindividualperyear: recorded once a year, essentially the "metadata" about the plant: DBH, height, etc.

There are other files in each download including a readme with information on the data product and the download; a variables file that defines the term descriptions, data types, and units; a validation file with data entry validation and parsing rules; and an XML with machine readable metadata.

Stack NEON Data

NEON data are delivered in a site and year-month format. When you download data, you will get a single zipped file containing a directory for each month and site that you've requested data for. Dealing with these separate tables from even one or two sites over a 12 month period can be a bit overwhelming. Luckily NEON provides an R package neonUtilities that takes the unzipped downloaded file and joining the data files. The teaching data downloaded with this tutorial is already stacked. If you are working with other NEON data, please go through the tutorial to stack the data in R or in Python and then return to this tutorial.

Work with NEON Data

When we do this for phenology data we get three files, one for each data table, with all the data from your site and date range of interest.

First, we need to set up our R environment.

# install needed package (only uncomment & run if not already installed)

#install.packages("neonUtilities")

#install.packages("dplyr")

#install.packages("ggplot2")

# load needed packages

library(neonUtilities)

library(dplyr)

library(ggplot2)

options(stringsAsFactors=F) #keep strings as character type not factors

# set working directory to ensure R can find the file we wish to import and where

# we want to save our files. Be sure to move the download into your working directory!

wd <- "~/Git/data/" # Change this to match your local environment

setwd(wd)

Let's start by loading our data of interest. For this series, we'll work with date from the NEON Domain 02 sites:

- Blandy Farm (BLAN)

- Smithsonian Conservation Biology Institute (SCBI)

- Smithsonian Environmental Research Center (SERC)

And we'll use data from January 2017 to December 2019. This downloads over 9MB of data. If this is too large, use a smaller date range. If you opt to do this, your figures and some output may look different later in the tutorial.

With this information, we can download our data using the neonUtilities package.

If you are not using a NEON token to download your data, remove the

token = Sys.getenv(NEON_TOKEN) line of code (learn more about NEON API tokens

in the

Using an API Token when Accessing NEON Data with neonUtilities tutorial).

If you are using the data downloaded at the start of the tutorial, use the commented out code in the second half of this code chunk.

## Two options for accessing data - programmatic or from the example dataset

# Read data from data portal

phe <- loadByProduct(dpID = "DP1.10055.001", site=c("BLAN","SCBI","SERC"),

startdate = "2017-01", enddate="2019-12",

token = Sys.getenv("NEON_TOKEN"),

check.size = F)

## API token was not recognized. Public rate limit applied.

## Finding available files

##

|

| | 0%

|

|= | 1%

|

|= | 2%

|

|== | 3%

|

|=== | 4%

|

|=== | 5%

|

|==== | 6%

|

|==== | 7%

|

|===== | 8%

|

|====== | 9%

|

|====== | 11%

|

|======= | 12%

|

|======== | 13%

|

|======== | 14%

|

|========= | 15%

|

|========== | 16%

|

|========== | 17%

|

|=========== | 18%

|

|============ | 19%

|

|============ | 20%

|

|============= | 21%

|

|============= | 22%

|

|============== | 23%

|

|=============== | 24%

|

|=============== | 25%

|

|================ | 26%

|

|================= | 27%

|

|================= | 28%

|

|================== | 29%

|

|=================== | 31%

|

|=================== | 32%

|

|==================== | 33%

|

|===================== | 34%

|

|===================== | 35%

|

|====================== | 36%

|

|====================== | 37%

|

|======================= | 38%

|

|======================== | 39%

|

|======================== | 40%

|

|========================= | 41%

|

|========================== | 42%

|

|========================== | 43%

|

|=========================== | 44%

|

|============================ | 45%

|

|============================ | 46%

|

|============================= | 47%

|

|============================== | 48%

|

|============================== | 49%

|

|=============================== | 51%

|

|=============================== | 52%

|

|================================ | 53%

|

|================================= | 54%

|

|================================= | 55%

|

|================================== | 56%

|

|=================================== | 57%

|

|=================================== | 58%

|

|==================================== | 59%

|

|===================================== | 60%

|

|===================================== | 61%

|

|====================================== | 62%

|

|======================================= | 63%

|

|======================================= | 64%

|

|======================================== | 65%

|

|======================================== | 66%

|

|========================================= | 67%

|

|========================================== | 68%

|

|========================================== | 69%

|

|=========================================== | 71%

|

|============================================ | 72%

|

|============================================ | 73%

|

|============================================= | 74%

|

|============================================== | 75%

|

|============================================== | 76%

|

|=============================================== | 77%

|

|================================================ | 78%

|

|================================================ | 79%

|

|================================================= | 80%

|

|================================================= | 81%

|

|================================================== | 82%

|

|=================================================== | 83%

|

|=================================================== | 84%

|

|==================================================== | 85%

|

|===================================================== | 86%

|

|===================================================== | 87%

|

|====================================================== | 88%

|

|======================================================= | 89%

|

|======================================================= | 91%

|

|======================================================== | 92%

|

|========================================================= | 93%

|

|========================================================= | 94%

|

|========================================================== | 95%

|

|========================================================== | 96%

|

|=========================================================== | 97%

|

|============================================================ | 98%

|

|============================================================ | 99%

|

|=============================================================| 100%

##

## Downloading files totaling approximately 7.985319 MB

## Downloading 95 files

##

|

| | 0%

|

|= | 1%

|

|= | 2%

|

|== | 3%

|

|=== | 4%

|

|=== | 5%

|

|==== | 6%

|

|===== | 7%

|

|===== | 9%

|

|====== | 10%

|

|====== | 11%

|

|======= | 12%

|

|======== | 13%

|

|======== | 14%

|

|========= | 15%

|

|========== | 16%

|

|========== | 17%

|

|=========== | 18%

|

|============ | 19%

|

|============ | 20%

|

|============= | 21%

|

|============== | 22%

|

|============== | 23%

|

|=============== | 24%

|

|================ | 26%

|

|================ | 27%

|

|================= | 28%

|

|================== | 29%

|

|================== | 30%

|

|=================== | 31%

|

|=================== | 32%

|

|==================== | 33%

|

|===================== | 34%

|

|===================== | 35%

|

|====================== | 36%

|

|======================= | 37%

|

|======================= | 38%

|

|======================== | 39%

|

|========================= | 40%

|

|========================= | 41%

|

|========================== | 43%

|

|=========================== | 44%

|

|=========================== | 45%

|

|============================ | 46%

|

|============================= | 47%

|

|============================= | 48%

|

|============================== | 49%

|

|============================== | 50%

|

|=============================== | 51%

|

|================================ | 52%

|

|================================ | 53%

|

|================================= | 54%

|

|================================== | 55%

|

|================================== | 56%

|

|=================================== | 57%

|

|==================================== | 59%

|

|==================================== | 60%

|

|===================================== | 61%

|

|====================================== | 62%

|

|====================================== | 63%

|

|======================================= | 64%

|

|======================================== | 65%

|

|======================================== | 66%

|

|========================================= | 67%

|

|========================================== | 68%

|

|========================================== | 69%

|

|=========================================== | 70%

|

|=========================================== | 71%

|

|============================================ | 72%

|

|============================================= | 73%

|

|============================================= | 74%

|

|============================================== | 76%

|

|=============================================== | 77%

|

|=============================================== | 78%

|

|================================================ | 79%

|

|================================================= | 80%

|

|================================================= | 81%

|

|================================================== | 82%

|

|=================================================== | 83%

|

|=================================================== | 84%

|

|==================================================== | 85%

|

|===================================================== | 86%

|

|===================================================== | 87%

|

|====================================================== | 88%

|

|======================================================= | 89%

|

|======================================================= | 90%

|

|======================================================== | 91%

|

|======================================================== | 93%

|

|========================================================= | 94%

|

|========================================================== | 95%

|

|========================================================== | 96%

|

|=========================================================== | 97%

|

|============================================================ | 98%

|

|============================================================ | 99%

|

|=============================================================| 100%

##

## Unpacking zip files using 1 cores.

## Stacking operation across a single core.

## Stacking table phe_perindividual

## Stacking table phe_statusintensity

## Stacking table phe_perindividualperyear

## Copied the most recent publication of validation file to /stackedFiles

## Copied the most recent publication of categoricalCodes file to /stackedFiles

## Copied the most recent publication of variable definition file to /stackedFiles

## Finished: Stacked 3 data tables and 3 metadata tables!

## Stacking took 1.46806 secs

# if you aren't sure you can handle the data file size use check.size = T.

# save dataframes from the downloaded list

ind <- phe$phe_perindividual #individual information

status <- phe$phe_statusintensity #status & intensity info

##If choosing to use example dataset downloaded from this tutorial:

# Stack multiple files within the downloaded phenology data

#stackByTable("NEON-pheno-temp-timeseries_v2/filesToStack10055", folder = T)

# read in data - readTableNEON uses the variables file to assign the correct

# data type for each variable

#ind <- readTableNEON('NEON-pheno-temp-timeseries_v2/filesToStack10055/stackedFiles/phe_perindividual.csv', 'NEON-pheno-temp-timeseries_v2/filesToStack10055/stackedFiles/variables_10055.csv')

#status <- readTableNEON('NEON-pheno-temp-timeseries_v2/filesToStack10055/stackedFiles/phe_statusintensity.csv', 'NEON-pheno-temp-timeseries_v2/filesToStack10055/stackedFiles/variables_10055.csv')

Let's explore the data. Let's get to know what the ind dataframe looks like.

# What are the fieldnames in this dataset?

names(ind)

## [1] "uid" "namedLocation"

## [3] "domainID" "siteID"

## [5] "plotID" "decimalLatitude"

## [7] "decimalLongitude" "geodeticDatum"

## [9] "coordinateUncertainty" "elevation"

## [11] "elevationUncertainty" "subtypeSpecification"

## [13] "transectMeter" "directionFromTransect"

## [15] "ninetyDegreeDistance" "sampleLatitude"

## [17] "sampleLongitude" "sampleGeodeticDatum"

## [19] "sampleCoordinateUncertainty" "sampleElevation"

## [21] "sampleElevationUncertainty" "date"

## [23] "editedDate" "individualID"

## [25] "taxonID" "scientificName"

## [27] "identificationQualifier" "taxonRank"

## [29] "nativeStatusCode" "growthForm"

## [31] "vstTag" "samplingProtocolVersion"

## [33] "measuredBy" "identifiedBy"

## [35] "recordedBy" "remarks"

## [37] "dataQF" "publicationDate"

## [39] "release"

# Unsure of what some of the variables are you? Look at the variables table!

View(phe$variables_10055)

# if using the pre-downloaded data, you need to read in the variables file

# or open and look at it on your desktop

#var <- read.csv('NEON-pheno-temp-timeseries_v2/filesToStack10055/stackedFiles/variables_10055.csv')

#View(var)

# how many rows are in the data?

nrow(ind)

## [1] 433

# look at the first six rows of data.

#head(ind) #this is a good function to use but looks messy so not rendering it

# look at the structure of the dataframe.

str(ind)

## 'data.frame': 433 obs. of 39 variables:

## $ uid : chr "76bf37d9-c834-43fc-a430-83d87e4b9289" "cf0239bb-2953-44a8-8fd2-051539be5727" "833e5f41-d5cb-4550-ba60-e6f000a2b1b6" "6c2e348d-d19e-4543-9d22-0527819ee964" ...

## $ namedLocation : chr "BLAN_061.phenology.phe" "BLAN_061.phenology.phe" "BLAN_061.phenology.phe" "BLAN_061.phenology.phe" ...

## $ domainID : chr "D02" "D02" "D02" "D02" ...

## $ siteID : chr "BLAN" "BLAN" "BLAN" "BLAN" ...

## $ plotID : chr "BLAN_061" "BLAN_061" "BLAN_061" "BLAN_061" ...

## $ decimalLatitude : num 39.1 39.1 39.1 39.1 39.1 ...

## $ decimalLongitude : num -78.1 -78.1 -78.1 -78.1 -78.1 ...

## $ geodeticDatum : chr NA NA NA NA ...

## $ coordinateUncertainty : num NA NA NA NA NA NA NA NA NA NA ...

## $ elevation : num 183 183 183 183 183 183 183 183 183 183 ...

## $ elevationUncertainty : num NA NA NA NA NA NA NA NA NA NA ...

## $ subtypeSpecification : chr "primary" "primary" "primary" "primary" ...

## $ transectMeter : num 491 464 537 15 753 506 527 305 627 501 ...

## $ directionFromTransect : chr "Left" "Right" "Left" "Left" ...

## $ ninetyDegreeDistance : num 0.5 4 2 3 2 1 2 3 2 3 ...

## $ sampleLatitude : num NA NA NA NA NA NA NA NA NA NA ...

## $ sampleLongitude : num NA NA NA NA NA NA NA NA NA NA ...

## $ sampleGeodeticDatum : chr "WGS84" "WGS84" "WGS84" "WGS84" ...

## $ sampleCoordinateUncertainty: num NA NA NA NA NA NA NA NA NA NA ...

## $ sampleElevation : num NA NA NA NA NA NA NA NA NA NA ...

## $ sampleElevationUncertainty : num NA NA NA NA NA NA NA NA NA NA ...

## $ date : POSIXct, format: "2016-04-20" ...

## $ editedDate : POSIXct, format: "2016-05-09" ...

## $ individualID : chr "NEON.PLA.D02.BLAN.06290" "NEON.PLA.D02.BLAN.06501" "NEON.PLA.D02.BLAN.06204" "NEON.PLA.D02.BLAN.06223" ...

## $ taxonID : chr "RHDA" "SOAL6" "RHDA" "LOMA6" ...

## $ scientificName : chr "Rhamnus davurica Pall." "Solidago altissima L." "Rhamnus davurica Pall." "Lonicera maackii (Rupr.) Herder" ...

## $ identificationQualifier : chr NA NA NA NA ...

## $ taxonRank : chr "species" "species" "species" "species" ...

## $ nativeStatusCode : chr "I" "N" "I" "I" ...

## $ growthForm : chr "Deciduous broadleaf" "Forb" "Deciduous broadleaf" "Deciduous broadleaf" ...

## $ vstTag : chr NA NA NA NA ...

## $ samplingProtocolVersion : chr NA "NEON.DOC.014040vJ" "NEON.DOC.014040vJ" "NEON.DOC.014040vJ" ...

## $ measuredBy : chr "jcoloso@neoninc.org" "jward@battelleecology.org" "alandes@field-ops.org" "alandes@field-ops.org" ...

## $ identifiedBy : chr "shackley@neoninc.org" "llemmon@field-ops.org" "llemmon@field-ops.org" "llemmon@field-ops.org" ...

## $ recordedBy : chr "shackley@neoninc.org" NA NA NA ...

## $ remarks : chr "Nearly dead shaded out" "no entry" "no entry" "no entry" ...

## $ dataQF : chr NA NA NA NA ...

## $ publicationDate : chr "20201218T103411Z" "20201218T103411Z" "20201218T103411Z" "20201218T103411Z" ...

## $ release : chr "RELEASE-2021" "RELEASE-2021" "RELEASE-2021" "RELEASE-2021" ...

Notice that the neonUtilities package read the data type from the variables file and then automatically converts the data to the correct date type in R.

(Note that if you first opened your data file in Excel, you might see 06/14/2014 as the format instead of 2014-06-14. Excel can do some ~~weird~~ interesting things to dates.)

Phenology status

Now let's look at the status data.

# What variables are included in this dataset?

names(status)

## [1] "uid" "namedLocation"

## [3] "domainID" "siteID"

## [5] "plotID" "date"

## [7] "editedDate" "dayOfYear"

## [9] "individualID" "phenophaseName"

## [11] "phenophaseStatus" "phenophaseIntensityDefinition"

## [13] "phenophaseIntensity" "samplingProtocolVersion"

## [15] "measuredBy" "recordedBy"

## [17] "remarks" "dataQF"

## [19] "publicationDate" "release"

nrow(status)

## [1] 219357

#head(status) #this is a good function to use but looks messy so not rendering it

str(status)

## 'data.frame': 219357 obs. of 20 variables:

## $ uid : chr "b69ada55-41d1-41c7-9031-149c54de51f9" "9be6f7ad-4422-40ac-ba7f-e32e0184782d" "58e7aeaf-163c-4ea2-ad75-db79a580f2f8" "efe7ca02-d09e-4964-b35d-aebdac8f3efb" ...

## $ namedLocation : chr "BLAN_061.phenology.phe" "BLAN_061.phenology.phe" "BLAN_061.phenology.phe" "BLAN_061.phenology.phe" ...

## $ domainID : chr "D02" "D02" "D02" "D02" ...

## $ siteID : chr "BLAN" "BLAN" "BLAN" "BLAN" ...

## $ plotID : chr "BLAN_061" "BLAN_061" "BLAN_061" "BLAN_061" ...

## $ date : POSIXct, format: "2017-02-24" ...

## $ editedDate : POSIXct, format: "2017-03-31" ...

## $ dayOfYear : num 55 55 55 55 55 55 55 55 55 55 ...

## $ individualID : chr "NEON.PLA.D02.BLAN.06229" "NEON.PLA.D02.BLAN.06226" "NEON.PLA.D02.BLAN.06222" "NEON.PLA.D02.BLAN.06223" ...

## $ phenophaseName : chr "Leaves" "Leaves" "Leaves" "Leaves" ...

## $ phenophaseStatus : chr "no" "no" "no" "no" ...

## $ phenophaseIntensityDefinition: chr NA NA NA NA ...

## $ phenophaseIntensity : chr NA NA NA NA ...

## $ samplingProtocolVersion : chr NA NA NA NA ...

## $ measuredBy : chr "llemmon@neoninc.org" "llemmon@neoninc.org" "llemmon@neoninc.org" "llemmon@neoninc.org" ...

## $ recordedBy : chr "llemmon@neoninc.org" "llemmon@neoninc.org" "llemmon@neoninc.org" "llemmon@neoninc.org" ...

## $ remarks : chr NA NA NA NA ...

## $ dataQF : chr "legacyData" "legacyData" "legacyData" "legacyData" ...

## $ publicationDate : chr "20201217T203824Z" "20201217T203824Z" "20201217T203824Z" "20201217T203824Z" ...

## $ release : chr "RELEASE-2021" "RELEASE-2021" "RELEASE-2021" "RELEASE-2021" ...

# date range

min(status$date)

## [1] "2017-02-24 GMT"

max(status$date)

## [1] "2019-12-12 GMT"

Clean up the Data

- remove duplicates (full rows)

- convert to date format

- retain only the most recent

editedDatein the perIndividual and status table.

Remove Duplicates

The individual table (ind) file is included in each site by month-year file. As a result when all the tables are stacked there are many duplicates.

Let's remove any duplicates that exist.

# drop UID as that will be unique for duplicate records

ind_noUID <- select(ind, -(uid))

status_noUID <- select(status, -(uid))

# remove duplicates

## expect many

ind_noD <- distinct(ind_noUID)

nrow(ind_noD)

## [1] 433

status_noD<-distinct(status_noUID)

nrow(status_noD)

## [1] 216837

Variable Overlap between Tables

From the initial inspection of the data we can see there is overlap in variable names between the fields.

Let's see what they are.

# where is there an intersection of names

intersect(names(status_noD), names(ind_noD))

## [1] "namedLocation" "domainID"

## [3] "siteID" "plotID"

## [5] "date" "editedDate"

## [7] "individualID" "samplingProtocolVersion"

## [9] "measuredBy" "recordedBy"

## [11] "remarks" "dataQF"

## [13] "publicationDate" "release"

There are several fields that overlap between the datasets. Some of these are expected to be the same and will be what we join on.

However, some of these will have different values in each table. We want to keep those distinct value and not join on them. Therefore, we can rename these fields before joining:

- date

- editedDate

- measuredBy

- recordedBy

- samplingProtocolVersion

- remarks

- dataQF

- publicationDate

Now we want to rename the variables that would have duplicate names. We can rename all the variables in the status object to have "Stat" at the end of the variable name.

# in Status table rename like columns

status_noD <- rename(status_noD, dateStat=date,

editedDateStat=editedDate, measuredByStat=measuredBy,

recordedByStat=recordedBy,

samplingProtocolVersionStat=samplingProtocolVersion,

remarksStat=remarks, dataQFStat=dataQF,

publicationDateStat=publicationDate)

Filter to last editedDate

The individual (ind) table contains all instances that any of the location or

taxonomy data of an individual was updated. Therefore there are many rows for

some individuals. We only want the latest editedDate on ind.

# retain only the max of the date for each individualID

ind_last <- ind_noD %>%

group_by(individualID) %>%

filter(editedDate==max(editedDate))

# oh wait, duplicate dates, retain only the most recent editedDate

ind_lastnoD <- ind_last %>%

group_by(editedDate, individualID) %>%

filter(row_number()==1)

Join Dataframes

Now we can join the two data frames on all the variables with the same name.

We use a left_join() from the dpylr package because we want to match all the

rows from the "left" (first) dataframe to any rows that also occur in the "right"

(second) dataframe.

Check out RStudio's data wrangling (dplyr/tidyr) cheatsheet for other types of joins.

# Create a new dataframe "phe_ind" with all the data from status and some from ind_lastnoD

phe_ind <- left_join(status_noD, ind_lastnoD)

## Joining, by = c("namedLocation", "domainID", "siteID", "plotID", "individualID", "release")

Now that we have clean datasets we can begin looking into our particular data to address our research question: do plants show patterns of changes in phenophase across season?

Patterns in Phenophase

From our larger dataset (several sites, species, phenophases), let's create a

dataframe with only the data from a single site, species, and phenophase and

call it phe_1sp.

Select Site(s) of Interest

To do this, we'll first select our site of interest. Note how we set this up with an object that is our site of interest. This will allow us to more easily change which site or sites if we want to adapt our code later.

# set site of interest

siteOfInterest <- "SCBI"

# use filter to select only the site of Interest

## using %in% allows one to add a vector if you want more than one site.

## could also do it with == instead of %in% but won't work with vectors

phe_1st <- filter(phe_ind, siteID %in% siteOfInterest)

Select Species of Interest

Now we may only want to view a single species or a set of species. Let's first

look at the species that are present in our data. We could do this just by looking

at the taxonID field which give the four letter UDSA plant code for each

species. But if we don't know all the plant codes, we can get a bit fancier and

view both

# see which species are present - taxon ID only

unique(phe_1st$taxonID)

## [1] "JUNI" "MIVI" "LITU"

# or see which species are present with taxon ID + species name

unique(paste(phe_1st$taxonID, phe_1st$scientificName, sep=' - '))

## [1] "JUNI - Juglans nigra L."

## [2] "MIVI - Microstegium vimineum (Trin.) A. Camus"

## [3] "LITU - Liriodendron tulipifera L."

For now, let's choose only the flowering tree Liriodendron tulipifera (LITU).

By writing it this way, we could also add a list of species to the speciesOfInterest

object to select for multiple species.

speciesOfInterest <- "LITU"

#subset to just "LITU"

# here just use == but could also use %in%

phe_1sp <- filter(phe_1st, taxonID==speciesOfInterest)

# check that it worked

unique(phe_1sp$taxonID)

## [1] "LITU"

Select Phenophase of Interest

And, perhaps a single phenophase.

# see which phenophases are present

unique(phe_1sp$phenophaseName)

## [1] "Open flowers" "Breaking leaf buds"

## [3] "Colored leaves" "Increasing leaf size"

## [5] "Falling leaves" "Leaves"

phenophaseOfInterest <- "Leaves"

#subset to just the phenosphase of interest

phe_1sp <- filter(phe_1sp, phenophaseName %in% phenophaseOfInterest)

# check that it worked

unique(phe_1sp$phenophaseName)

## [1] "Leaves"

Select only Primary Plots

NEON plant phenology observations are collected along two types of plots.

- Primary plots: an 800 meter square phenology loop transect

- Phenocam plots: a 200 m x 200 m plot located within view of a canopy level, tower-mounted, phenology camera

In the data, these plots are differentiated by the subtypeSpecification.

Depending on your question you may want to use only one or both of these plot types.

For this activity, we're going to only look at the primary plots.

# what plots are present?

unique(phe_1sp$subtypeSpecification)

## [1] "primary" "phenocam"

# filter

phe_1spPrimary <- filter(phe_1sp, subtypeSpecification == 'primary')

# check that it worked

unique(phe_1spPrimary$subtypeSpecification)

## [1] "primary"

Total in Phenophase of Interest

The phenophaseState is recorded as "yes" or "no" that the individual is in that

phenophase. The phenophaseIntensity are categories for how much of the individual

is in that state. For now, we will stick with phenophaseState.

We can now calculate the total number of individuals with that state. We use

n_distinct(indvidualID) count the individuals (and not the records) in case

there are duplicate records for an individual.

But later on we'll also want to calculate the percent of the observed individuals in the "leaves" status, therefore, we're also adding in a step here to retain the sample size so that we can calculate % later.

Here we use pipes %>% from the dpylr package to "pass" objects onto the next

function.

# Calculate sample size for later use

sampSize <- phe_1spPrimary %>%

group_by(dateStat) %>%

summarise(numInd= n_distinct(individualID))

# Total in status by day for distinct individuals

inStat <- phe_1spPrimary%>%

group_by(dateStat, phenophaseStatus)%>%

summarise(countYes=n_distinct(individualID))

## `summarise()` has grouped output by 'dateStat'. You can override using the `.groups` argument.

inStat <- full_join(sampSize, inStat, by="dateStat")

# Retain only Yes

inStat_T <- filter(inStat, phenophaseStatus %in% "yes")

# check that it worked

unique(inStat_T$phenophaseStatus)

## [1] "yes"

Now that we have the data we can plot it.

Plot with ggplot

The ggplot() function within the ggplot2 package gives us considerable control

over plot appearance. Three basic elements are needed for ggplot() to work:

- The data_frame: containing the variables that we wish to plot,

-

aes(aesthetics): which denotes which variables will map to the x-, y- (and other) axes, -

geom_XXXX(geometry): which defines the data's graphical representation (e.g. points (geom_point), bars (geom_bar), lines (geom_line), etc).

The syntax begins with the base statement that includes the data_frame

(inStat_T) and associated x (date) and y (n) variables to be

plotted:

ggplot(inStat_T, aes(date, n))

Bar Plots with ggplot

To successfully plot, the last piece that is needed is the geometry type.

To create a bar plot, we set the geom element from to geom_bar().

The default setting for a ggplot bar plot - geom_bar() - is a histogram

designated by stat="bin". However, in this case, we want to plot count values.

We can use geom_bar(stat="identity") to force ggplot to plot actual values.

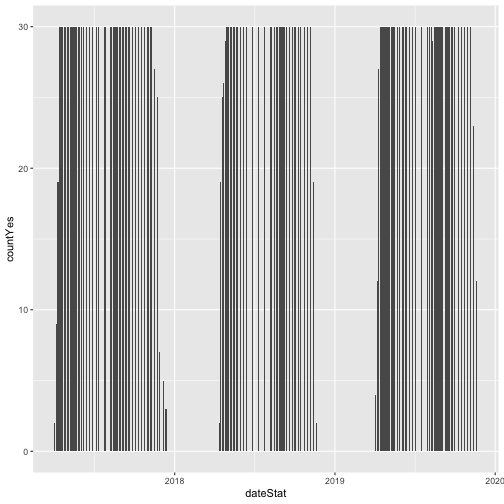

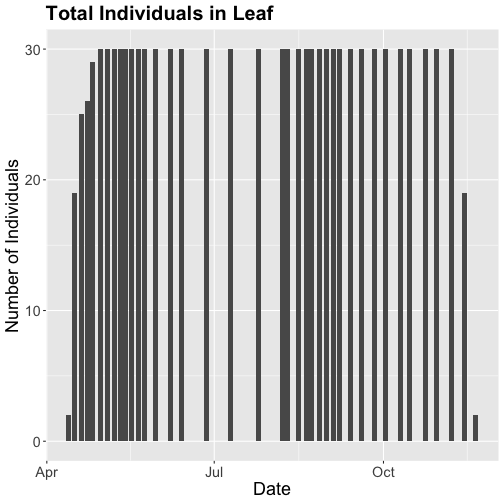

# plot number of individuals in leaf

phenoPlot <- ggplot(inStat_T, aes(dateStat, countYes)) +

geom_bar(stat="identity", na.rm = TRUE)

phenoPlot

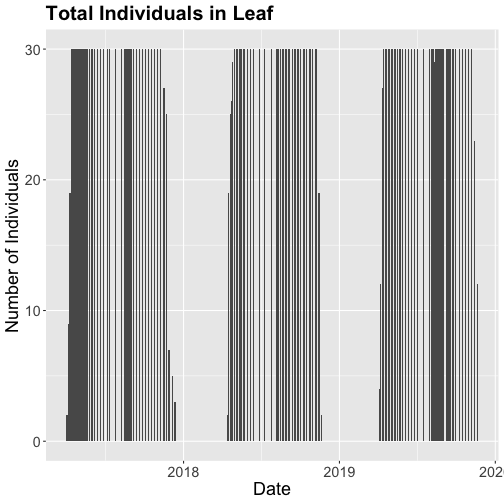

# Now let's make the plot look a bit more presentable

phenoPlot <- ggplot(inStat_T, aes(dateStat, countYes)) +

geom_bar(stat="identity", na.rm = TRUE) +

ggtitle("Total Individuals in Leaf") +

xlab("Date") + ylab("Number of Individuals") +

theme(plot.title = element_text(lineheight=.8, face="bold", size = 20)) +

theme(text = element_text(size=18))

phenoPlot

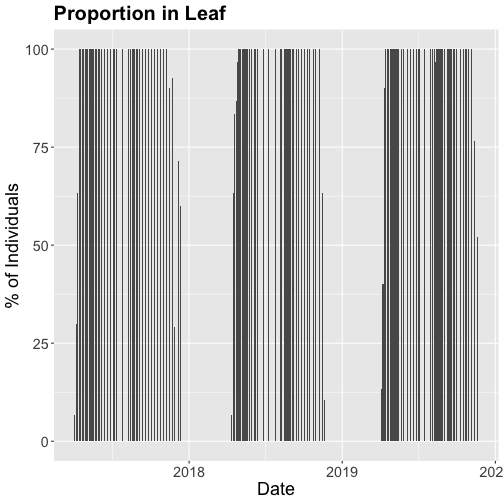

We could also covert this to percentage and plot that.

# convert to percent

inStat_T$percent<- ((inStat_T$countYes)/inStat_T$numInd)*100

# plot percent of leaves

phenoPlot_P <- ggplot(inStat_T, aes(dateStat, percent)) +

geom_bar(stat="identity", na.rm = TRUE) +

ggtitle("Proportion in Leaf") +

xlab("Date") + ylab("% of Individuals") +

theme(plot.title = element_text(lineheight=.8, face="bold", size = 20)) +

theme(text = element_text(size=18))

phenoPlot_P

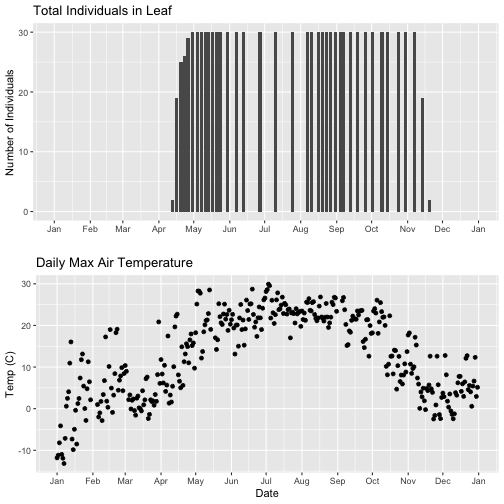

The plots demonstrate the nice expected pattern of increasing leaf-out, peak, and drop-off.

Drivers of Phenology

Now that we see that there are differences in and shifts in phenophases, what are the drivers of phenophases?

The NEON phenology measurements track sensitive and easily observed indicators of biotic responses to meteorological variability by monitoring the timing and duration of phenological stages in plant communities. Plant phenology is affected by forces such as temperature, timing and duration of pest infestations and disease outbreaks, water fluxes, nutrient budgets, carbon dynamics, and food availability and has feedbacks to trophic interactions, carbon sequestration, community composition and ecosystem function. (quoted from Plant Phenology Observations user guide.)

Filter by Date

In the next part of this series, we will be exploring temperature as a driver of phenology. Temperature date is quite large (NEON provides this in 1 minute or 30 minute intervals) so let's trim our phenology date down to only one year so that we aren't working with as large a data.

Let's filter to just 2018 data.

# use filter to select only the date of interest

phe_1sp_2018 <- filter(inStat_T, dateStat >= "2018-01-01" & dateStat <= "2018-12-31")

# did it work?

range(phe_1sp_2018$dateStat)

## [1] "2018-04-13 GMT" "2018-11-20 GMT"

How does that look?

# Now let's make the plot look a bit more presentable

phenoPlot18 <- ggplot(phe_1sp_2018, aes(dateStat, countYes)) +

geom_bar(stat="identity", na.rm = TRUE) +

ggtitle("Total Individuals in Leaf") +

xlab("Date") + ylab("Number of Individuals") +

theme(plot.title = element_text(lineheight=.8, face="bold", size = 20)) +

theme(text = element_text(size=18))

phenoPlot18

Now that we've filtered down to just the 2018 data from SCBI for LITU in leaf, we may want to save that subsetted data for another use. To do that you can write the data frame to a .csv file.

You do not need to follow this step if you are continuing on to the next tutorials in this series as you already have the data frame in your environment. Of course if you close R and then come back to it, you will need to re-load this data and instructions for that are provided in the relevant tutorials.

# Write .csv - this step is optional

# This will write to your current working directory, change as desired.

write.csv( phe_1sp_2018 , file="NEONpheno_LITU_Leaves_SCBI_2018.csv", row.names=F)

#If you are using the downloaded example date, this code will write it to the

# pheno data file. Note - this file is already a part of the download.

#write.csv( phe_1sp_2018 , file="NEON-pheno-temp-timeseries_v2/NEONpheno_LITU_Leaves_SCBI_2018.csv", row.names=F)

Get Lesson Code

Work with NEON's Single-Aspirated Air Temperature Data

Authors: Lee Stanish, Megan A. Jones, Natalie Robinson

Last Updated: Apr 10, 2025

In this tutorial, we explore the NEON single-aspirated air temperature data. We then discuss how to interpret the variables, how to work with date-time and date formats, and finally how to plot the data.

This tutorial is part of a series on how to work with both discrete and continuous time series data with NEON plant phenology and temperature data products.

Objectives

After completing this activity, you will be able to:

- work with "stacked" NEON Single-Aspirated Air Temperature data.

- correctly format date-time data.

- use dplyr functions to filter data.

- plot time series data in scatter plots using ggplot function.

Things You’ll Need To Complete This Tutorial

You will need the most current version of R and, preferably, RStudio loaded

on your computer to complete this tutorial.

Install R Packages

-

neonUtilities:

install.packages("neonUtilities") -

ggplot2:

install.packages("ggplot2") -

dplyr:

install.packages("dplyr") -

tidyr:

install.packages("tidyr")

More on Packages in R – Adapted from Software Carpentry.

Additional Resources

- NEON data portal

- RStudio's data wrangling (dplyr/tidyr) cheatsheet

- NEONScience GitHub Organization

- nneo API wrapper on CRAN

- RStudio's data wrangling (dplyr/tidyr) cheatsheet

- Hadley Wickham's

documentation

on the

ggplot2package. - Winston Chang's

Background Information About NEON Air Temperature Data

Air temperature is continuously monitored by NEON by two methods. At terrestrial sites temperature at the top of the tower is derived from a triple redundant aspirated air temperature sensor. This is provided as NEON data product DP1.00003.001. Single Aspirated Air Temperature sensors (SAAT) are deployed to develop temperature profiles at multiple levels on the tower at NEON terrestrial sites and on the meteorological stations at NEON aquatic sites. This is provided as NEON data product DP1.00002.001.

When designing a research project using this data, consult the Data Product Details Page for more detailed documentation.

Single-aspirated Air Temperature

Air temperature profiles are ascertained by deploying SAATs at various heights on NEON tower infrastructure. Air temperature at aquatic sites is measured using a single SAAT at a standard height of 3m above ground level. Air temperature for this data product is provided as one- and thirty-minute averages of 1 Hz observations. Temperature observations are made using platinum resistance thermometers, which are housed in a fan aspirated shield to reduce radiative heating. The temperature is measured in Ohms and subsequently converted to degrees Celsius during data processing. Details on the conversion can be found in the associated Algorithm Theoretic Basis Document (ATBD; see Product Details page linked above).

Available Data Tables

The SAAT data product contains two data tables for each site and month selected, consisting of the 1-minute and 30-minute averaging intervals. In addition, there are several metadata files that provide additional useful information.

- readme with information on the data product and the download

- variables file that defines the terms, data types, and units

- EML file with machine readable metadata in standardized Ecological Metadata Language

Access NEON Data

There are several ways to access NEON data, directly from the NEON data portal, access through a data partner (select data products only), writing code to directly pull data from the NEON API, or, as we'll do here, using the neonUtilities package which is a wrapper for the API to make working with the data easier.

Downloading from the Data Portal

If you prefer to download data from the data portal, please review the Getting started and Stack the downloaded data sections of the Download and Explore NEON Data tutorial. This will get you to the point where you can download data from sites or dates of interest and resume this tutorial.

Downloading Data Using neonUtilities

First, we need to set up our environment with the packages needed for this tutorial.

# Install needed package (only uncomment & run if not already installed)

#install.packages("neonUtilities")

#install.packages("ggplot2")

#install.packages("dplyr")

#install.packages("tidyr")

# Load required libraries

library(neonUtilities) # for accessing NEON data

library(ggplot2) # for plotting

library(dplyr) # for data munging

library(tidyr) # for data munging

# set working directory

# this step is optional, only needed if you plan to save the

# data files at the end of the tutorial

wd <- "~/data" # enter your working directory here

setwd(wd)

This tutorial is part of series working with discrete plant phenology data and (nearly) continuous temperature data. Our overall "research" question is to see if there is any correlation between plant phenology and temperature. Therefore, we will want to work with data that align with the plant phenology data that we worked with in the first tutorial. If you are only interested in working with the temperature data, you do not need to complete the previous tutorial.

Our data of interest will be the temperature data from 2018 from NEON's Smithsonian Conservation Biology Institute (SCBI) field site located in Virginia near the northern terminus of the Blue Ridge Mountains.

NEON single aspirated air temperature data is available in two averaging intervals, 1 minute and 30 minute intervals. Which data you want to work with is going to depend on your research questions. Here, we're going to only download and work with the 30 minute interval data as we're primarily interest in longer term (daily, weekly, annual) patterns.

This will download 7.7 MB of data. check.size is set to false (F) to improve flow

of the script but is always a good idea to view the size with true (T) before

downloading a new dataset.

# download data of interest - Single Aspirated Air Temperature

saat <- loadByProduct(dpID="DP1.00002.001", site="SCBI",

startdate="2018-01", enddate="2018-12",

package="basic", timeIndex="30",

check.size = F)

Explore Temperature Data

Now that you have the data, let's take a look at the structure and understand

what's in the data. The data (saat) come in as a large list of four items.

View(saat)

So what exactly are these five files and why would you want to use them?

-

data file(s): There will always be one or more dataframes that include the

primary data of the data product you downloaded. Since we downloaded only the 30

minute averaged data we only have one data table

SAAT_30min. - readme_xxxxx: The readme file, with the corresponding 5 digits from the data product number, provides you with important information relevant to the data product and the specific instance of downloading the data.

- sensor_positions_xxxxx: This table contains the spatial coordinates of each sensor, relative to a reference location.

- variables_xxxxx: This table contains all the variables found in the associated data table(s). This includes full definitions, units, and rounding.

- issueLog_xxxxx: This table contains records of any known issues with the data product, such as sensor malfunctions.

- scienceReviewFlags_xxxxx: This table may or may not be present. It contains descriptions of adverse events that led to manual flagging of the data, and is usually more detailed than the issue log. It only contains records relevant to the sites and dates of data downloaded.

Since we want to work with the individual files, let's make the elements of the list into independent objects.

list2env(saat, .GlobalEnv)

## <environment: R_GlobalEnv>

Now let's take a look at the data table.

str(SAAT_30min)

## 'data.frame': 87600 obs. of 16 variables:

## $ domainID : chr "D02" "D02" "D02" "D02" ...

## $ siteID : chr "SCBI" "SCBI" "SCBI" "SCBI" ...

## $ horizontalPosition : chr "000" "000" "000" "000" ...

## $ verticalPosition : chr "010" "010" "010" "010" ...

## $ startDateTime : POSIXct, format: "2018-01-01 00:00:00" "2018-01-01 00:30:00" "2018-01-01 01:00:00" ...

## $ endDateTime : POSIXct, format: "2018-01-01 00:30:00" "2018-01-01 01:00:00" "2018-01-01 01:30:00" ...

## $ tempSingleMean : num -11.8 -11.8 -12 -12.2 -12.4 ...

## $ tempSingleMinimum : num -12.1 -12.2 -12.3 -12.6 -12.8 ...

## $ tempSingleMaximum : num -11.4 -11.3 -11.3 -11.7 -12.1 ...

## $ tempSingleVariance : num 0.0208 0.0315 0.0412 0.0393 0.0361 0.0289 0.0126 0.0211 0.0115 0.0022 ...

## $ tempSingleNumPts : num 1800 1800 1800 1800 1800 1800 1800 1800 1800 1800 ...

## $ tempSingleExpUncert: num 0.13 0.13 0.13 0.13 0.129 ...

## $ tempSingleStdErMean: num 0.0034 0.0042 0.0048 0.0047 0.0045 0.004 0.0026 0.0034 0.0025 0.0011 ...

## $ finalQF : num 0 0 0 0 0 0 0 0 0 0 ...

## $ publicationDate : chr "20221210T185420Z" "20221210T185420Z" "20221210T185420Z" "20221210T185420Z" ...

## $ release : chr "undetermined" "undetermined" "undetermined" "undetermined" ...

Quality Flags

The sensor data undergo a variety of automated quality assurance and quality control

checks. You can read about them in detail in the Quality Flags and Quality Metrics ATBD, in the Documentation section of the product details page.

The expanded data package

includes all of these quality flags, which can allow you to decide if not passing

one of the checks will significantly hamper your research and if you should

therefore remove the data from your analysis. Here, we're using the

basic data package, which only includes the final quality flag (finalQF),

which is aggregated from the full set of quality flags.

A pass of the check is 0, while a fail is 1. Let's see what percentage of the data we downloaded passed the quality checks.

sum(SAAT_30min$finalQF==1)/nrow(SAAT_30min)

## [1] 0.2340297

What should we do with the 23% of the data that are flagged? This may depend on why it is flagged and what questions you are asking, and the expanded data package would be useful for determining this.

For now, for demonstration purposes, we'll keep the flagged data.

What about null (NA) data?

sum(is.na(SAAT_30min$tempSingleMean))/nrow(SAAT_30min)

## [1] 0.2239269

mean(SAAT_30min$tempSingleMean)

## [1] NA

22% of the mean temperature values are NA. Note that this is not

additive with the flagged data! Empty data records are flagged, so this

indicates nearly all of the flagged data in our download are empty records.

Why was there no output from the calculation of mean temperature?

The R programming language, by default, won't calculate a mean (and many other

summary statistics) in data that contain NA values. We could override this

using the input parameter na.rm=TRUE in the mean() function, or just

remove the empty values from our analysis.

# create new dataframe without NAs

SAAT_30min_noNA <- SAAT_30min %>%

drop_na(tempSingleMean) # tidyr function

# alternate base R

# SAAT_30min_noNA <- SAAT_30min[!is.na(SAAT_30min$tempSingleMean),]

# did it work?

sum(is.na(SAAT_30min_noNA$tempSingleMean))

## [1] 0

Scatterplots with ggplot

We can use ggplot to create scatter plots. Which data should we plot, as we have several options?

- tempSingleMean: the mean temperature for the interval

- tempSingleMinimum: the minimum temperature during the interval

- tempSingleMaximum: the maximum temperature for the interval

Depending on exactly what question you are asking you may prefer to use one over the other. For many applications, the mean temperature of the 1- or 30-minute interval will provide the best representation of the data.

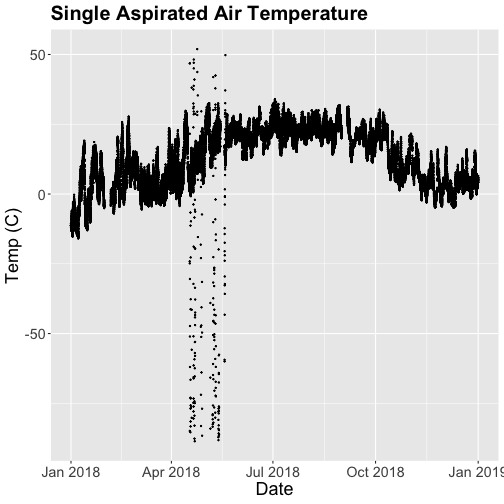

Let's plot it. (This is a plot of a large amount of data. It can take 1-2 mins to process. It is not essential for completing the next steps if this takes too much of your computer memory.)

# plot temp data

tempPlot <- ggplot(SAAT_30min, aes(startDateTime, tempSingleMean)) +

geom_point(size=0.3) +

ggtitle("Single Aspirated Air Temperature") +

xlab("Date") + ylab("Temp (C)") +

theme(plot.title = element_text(lineheight=.8, face="bold", size = 20)) +

theme(text = element_text(size=18))

tempPlot

## Warning: Removed 19616 rows containing missing values (`geom_point()`).

What patterns can you see in the data?

Something odd seems to have happened in late April/May 2018. Since it is unlikely Virginia experienced -50C during this time, these are probably erroneous sensor readings and why we should probably remove data that are flagged with those quality flags.

Right now we are also looking at all the data points in the dataset. However, we may want to view or aggregate the data differently:

- aggregated data: min, mean, or max over a some duration

- the number of days since a freezing temperatures

- or some other segregation of the data.

Given that in the previous tutorial, Work With NEON's Plant Phenology Data, we were working with phenology data collected on a daily scale let's aggregate to that level.

To make this plot better, lets do two things

- Remove flagged data

- Aggregate to a daily mean.

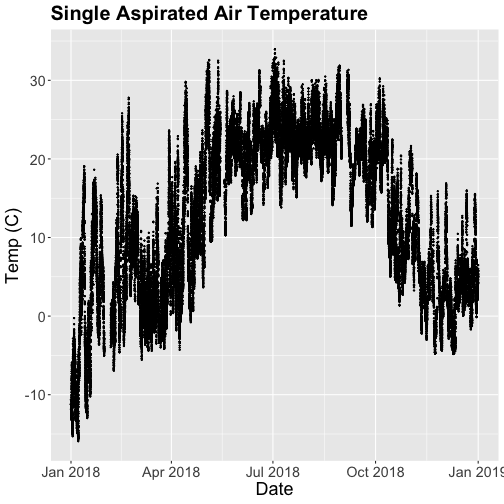

Subset to remove quality flagged data

We already removed the empty records. Now we'll subset the data to remove the remaining flagged data.

# subset and add C to name for "clean"

SAAT_30minC <- filter(SAAT_30min_noNA, SAAT_30min_noNA$finalQF==0)

# Do any quality flags remain?

sum(SAAT_30minC$finalQF==1)

## [1] 0

Now we can plot only the unflagged data.

# plot temp data

tempPlot <- ggplot(SAAT_30minC, aes(startDateTime, tempSingleMean)) +

geom_point(size=0.3) +

ggtitle("Single Aspirated Air Temperature") +

xlab("Date") + ylab("Temp (C)") +

theme(plot.title = element_text(lineheight=.8, face="bold", size = 20)) +

theme(text = element_text(size=18))

tempPlot

That looks better! But we're still working with the 30-minute data.

Aggregate Data by Day

We can use the dplyr package functions to aggregate the data. However, we have to choose which data we want to aggregate. Again, you might want daily minimum temps, mean temperature or maximum temps depending on your question.

In the context of phenology, minimum temperatures might be very important if you are interested in a species that is very frost susceptible. Any days with a minimum temperature below 0C could dramatically change the phenophase. For other species or meteorological zones, maximum thresholds may be very important. Or you might be mostinterested in the daily mean.

And note that you can combine different input values with different aggregation functions - for example, you could calculate the minimum of the half-hourly average temperature, or the average of the half-hourly maximum temperature.

For this tutorial, let's use maximum daily temperature, i.e. the maximum of the

tempSingleMax values for the day.

# convert to date, easier to work with

SAAT_30minC$Date <- as.Date(SAAT_30minC$startDateTime)

# max of mean temp each day

temp_day <- SAAT_30minC %>%

group_by(Date) %>%

distinct(Date, .keep_all=T) %>%

mutate(dayMax=max(tempSingleMaximum))

Now we can plot the cleaned up daily temperature.

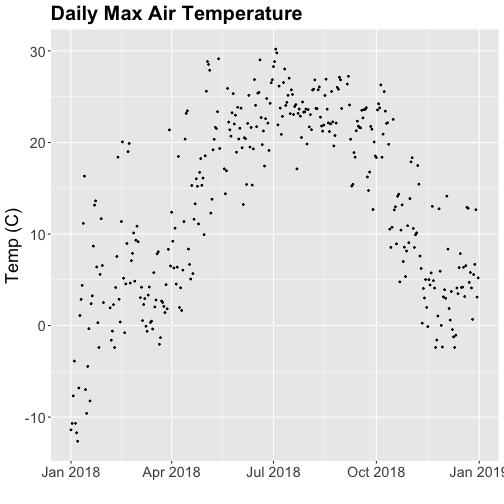

# plot Air Temperature Data across 2018 using daily data

tempPlot_dayMax <- ggplot(temp_day, aes(Date, dayMax)) +

geom_point(size=0.5) +

ggtitle("Daily Max Air Temperature") +

xlab("") + ylab("Temp (C)") +

theme(plot.title = element_text(lineheight=.8, face="bold", size = 20)) +

theme(text = element_text(size=18))

tempPlot_dayMax

Thought questions:

- What do we gain by this visualization?

- What do we lose relative to the 30 minute intervals?

ggplot - Subset by Time

Sometimes we want to scale the x- or y-axis to a particular time subset without

subsetting the entire data_frame. To do this, we can define start and end

times. We can then define these limits in the scale_x_date object as

follows:

scale_x_date(limits=start.end) +

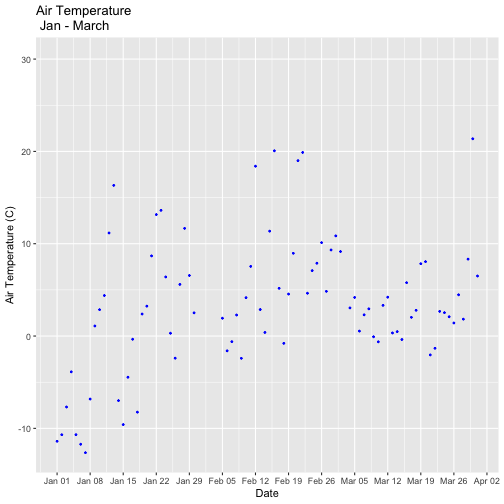

Let's plot just the first three months of the year.

# Define Start and end times for the subset as R objects that are the time class

startTime <- as.Date("2018-01-01")

endTime <- as.Date("2018-03-31")

# create a start and end time R object

start.end <- c(startTime,endTime)

str(start.end)

## Date[1:2], format: "2018-01-01" "2018-03-31"

# View data for first 3 months only

# And we'll add some color for a change.

tempPlot_dayMax3m <- ggplot(temp_day, aes(Date, dayMax)) +

geom_point(color="blue", size=0.5) +

ggtitle("Air Temperature\n Jan - March") +

xlab("Date") + ylab("Air Temperature (C)")+

(scale_x_date(limits=start.end,

date_breaks="1 week",

date_labels="%b %d"))

tempPlot_dayMax3m

## Warning: Removed 268 rows containing missing values (`geom_point()`).

Now we have the temperature data matching our Phenology data from the previous tutorial, we want to save it to our computer to use in future analyses (or the next tutorial). This is optional if you are continuing directly to the next tutorial as you already have the data in R.

# Write .csv - this step is optional

# This will write to the working directory we set at the start of the tutorial

write.csv(temp_day , file="NEONsaat_daily_SCBI_2018.csv", row.names=F)

Get Lesson Code

Plot Continuous & Discrete Data Together

Authors: Lee Stanish, Megan A. Jones, Natalie Robinson

Last Updated: May 7, 2021

This tutorial discusses ways to plot plant phenology (discrete time series) and single-aspirated temperature (continuous time series) together. It uses data frames created in the first two parts of this series, Work with NEON OS & IS Data - Plant Phenology & Temperature. If you have not completed these tutorials, please download the dataset below.

Objectives

After completing this tutorial, you will be able to:

- plot multiple figures together with grid.arrange()

- plot only a subset of dates

Things You’ll Need To Complete This Tutorial

You will need the most current version of R and, preferably, RStudio loaded

on your computer to complete this tutorial.

Install R Packages

-

neonUtilities:

install.packages("neonUtilities") -

ggplot2:

install.packages("ggplot2") -

dplyr:

install.packages("dplyr") -

gridExtra:

install.packages("gridExtra")

More on Packages in R – Adapted from Software Carpentry.

Download Data

This tutorial is designed to have you download data directly from the NEON

portal API using the neonUtilities package. However, you can also directly

download this data, prepackaged, from FigShare. This data set includes all the

files needed for the Work with NEON OS & IS Data - Plant Phenology & Temperature

tutorial series. The data are in the format you would receive if downloading them

using the zipsByProduct() function in the neonUtilities package.

To start, we need to set up our R environment. If you're continuing from the previous tutorial in this series, you'll only need to load the new packages.

# Install needed package (only uncomment & run if not already installed)

#install.packages("dplyr")

#install.packages("ggplot2")

#install.packages("scales")

# Load required libraries

library(ggplot2)

library(dplyr)

##

## Attaching package: 'dplyr'

## The following objects are masked from 'package:stats':

##

## filter, lag

## The following objects are masked from 'package:base':

##

## intersect, setdiff, setequal, union

library(gridExtra)

##

## Attaching package: 'gridExtra'

## The following object is masked from 'package:dplyr':

##

## combine

library(scales)

options(stringsAsFactors=F) #keep strings as character type not factors

# set working directory to ensure R can find the file we wish to import and where

# we want to save our files. Be sure to move the download into your working directory!

wd <- "~/Documents/data/" # Change this to match your local environment

setwd(wd)

If you don't already have the R objects, temp_day and phe_1sp_2018, loaded

you'll need to load and format those data. If you do, you can skip this code.

# Read in data -> if in series this is unnecessary

temp_day <- read.csv(paste0(wd,'NEON-pheno-temp-timeseries/NEONsaat_daily_SCBI_2018.csv'))

phe_1sp_2018 <- read.csv(paste0(wd,'NEON-pheno-temp-timeseries/NEONpheno_LITU_Leaves_SCBI_2018.csv'))

# Convert dates

temp_day$Date <- as.Date(temp_day$Date)

# use dateStat - the date the phenophase status was recorded

phe_1sp_2018$dateStat <- as.Date(phe_1sp_2018$dateStat)

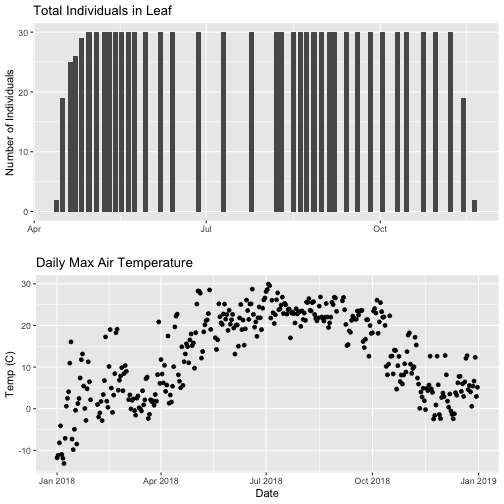

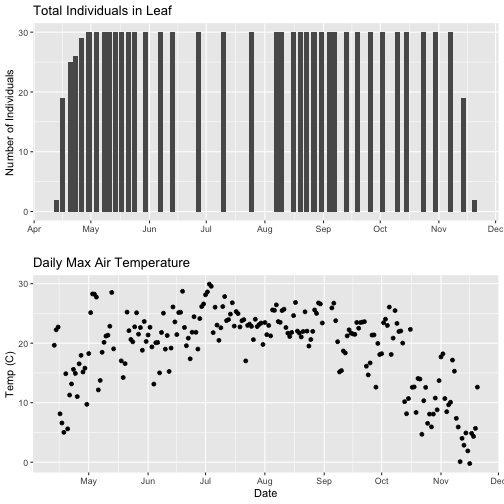

Separate Plots, Same Panel

In this dataset, we have phenology and temperature data from the Smithsonian Conservation Biology Institute (SCBI) NEON field site. There are a variety of ways we may want to look at this data, including aggregated at the site level, by a single plot, or viewing all plots at the same time but in separate plots. In the Work With NEON's Plant Phenology Data and the Work with NEON's Single-Aspirated Air Temperature Data tutorials, we created separate plots of the number of individuals who had leaves at different times of the year and the temperature in 2018.

However, plot the data next to each other to aid comparisons. The grid.arrange()

function from the gridExtra package can help us do this.

# first, create one plot

phenoPlot <- ggplot(phe_1sp_2018, aes(dateStat, countYes)) +

geom_bar(stat="identity", na.rm = TRUE) +

ggtitle("Total Individuals in Leaf") +

xlab("") + ylab("Number of Individuals")

# create second plot of interest

tempPlot_dayMax <- ggplot(temp_day, aes(Date, dayMax)) +

geom_point() +

ggtitle("Daily Max Air Temperature") +

xlab("Date") + ylab("Temp (C)")

# Then arrange the plots - this can be done with >2 plots as well.

grid.arrange(phenoPlot, tempPlot_dayMax)

Now, we can see both plots in the same window. But, hmmm... the x-axis on both plots is kinda wonky. We want the same spacing in the scale across the year (e.g., July in one should line up with July in the other) plus we want the dates to display in the same format(e.g. 2016-07 vs. Jul vs Jul 2018).

Format Dates in Axis Labels

The date format parameter can be adjusted with scale_x_date. Let's format the x-axis

ticks so they read "month" (%b) in both graphs. We will use the syntax:

scale_x_date(labels=date_format("%b"")

Rather than re-coding the entire plot, we can add the scale_x_date element

to the plot object phenoPlot we just created.

-

You can type

?strptimeinto the R console to find a list of date format conversion specifications (e.g. %b = month). Typescale_x_datefor a list of parameters that allow you to format dates on the x-axis. -

If you are working with a date & time class (e.g. POSIXct), you can use

scale_x_datetimeinstead ofscale_x_date.

# format x-axis: dates

phenoPlot <- phenoPlot +

(scale_x_date(breaks = date_breaks("1 month"), labels = date_format("%b")))

tempPlot_dayMax <- tempPlot_dayMax +

(scale_x_date(breaks = date_breaks("1 month"), labels = date_format("%b")))

# New plot.

grid.arrange(phenoPlot, tempPlot_dayMax)

But this only solves one of the problems, we still have a different range on the x-axis which makes it harder to see trends.

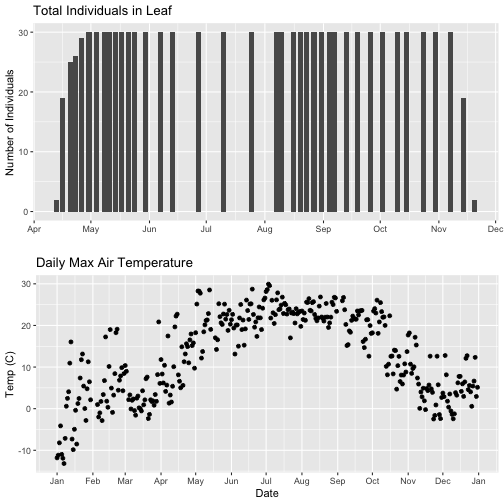

Align data sets with different start dates

Now let's work to align the values on the x-axis. We can do this in two ways,

- setting the x-axis to have the same date range or 2) by filtering the dataset itself to only include the overlapping data. Depending on what you are trying to demonstrate and if you're doing additional analyses and want only the overlapping data, you may prefer one over the other. Let's try both.

Set range of x-axis

Alternatively, we can set the x-axis range for both plots by adding the limits

parameter to the scale_x_date() function.

# first, lets recreate the full plot and add in the

phenoPlot_setX <- ggplot(phe_1sp_2018, aes(dateStat, countYes)) +

geom_bar(stat="identity", na.rm = TRUE) +

ggtitle("Total Individuals in Leaf") +

xlab("") + ylab("Number of Individuals") +

scale_x_date(breaks = date_breaks("1 month"),

labels = date_format("%b"),

limits = as.Date(c('2018-01-01','2018-12-31')))

# create second plot of interest

tempPlot_dayMax_setX <- ggplot(temp_day, aes(Date, dayMax)) +

geom_point() +

ggtitle("Daily Max Air Temperature") +

xlab("Date") + ylab("Temp (C)") +

scale_x_date(date_breaks = "1 month",

labels=date_format("%b"),

limits = as.Date(c('2018-01-01','2018-12-31')))

# Plot

grid.arrange(phenoPlot_setX, tempPlot_dayMax_setX)

Now we can really see the pattern over the full year. This emphasizes the point that during much of the late fall, winter, and early spring none of the trees have leaves on them (or that data were not collected - this plot would not distinguish between the two).

Subset one data set to match other

Alternatively, we can simply filter the dataset with the larger date range so the we only plot the data from the overlapping dates.

# filter to only having overlapping data

temp_day_filt <- filter(temp_day, Date >= min(phe_1sp_2018$dateStat) &

Date <= max(phe_1sp_2018$dateStat))

# Check

range(phe_1sp_2018$date)

## [1] "2018-04-13" "2018-11-20"

range(temp_day_filt$Date)

## [1] "2018-04-13" "2018-11-20"

#plot again

tempPlot_dayMaxFiltered <- ggplot(temp_day_filt, aes(Date, dayMax)) +

geom_point() +

scale_x_date(breaks = date_breaks("months"), labels = date_format("%b")) +

ggtitle("Daily Max Air Temperature") +

xlab("Date") + ylab("Temp (C)")

grid.arrange(phenoPlot, tempPlot_dayMaxFiltered)

With this plot, we really look at the area of overlap in the plotted data (but this does cut out the time where the data are collected but not plotted).

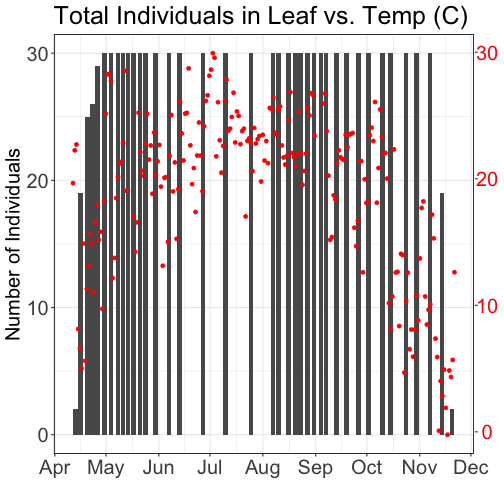

Same plot with two Y-axes

What about layering these plots and having two y-axes (right and left) that have the different scale bars?

Some argue that you should not do this as it can distort what is actually going

on with the data. The author of the ggplot2 package is one of these individuals.

Therefore, you cannot use ggplot() to create a single plot with multiple y-axis

scales. You can read his own discussion of the topic on this

StackOverflow post.

However, individuals have found work arounds for these plots. The code below is provided as a demonstration of this capability. Note, by showing this code here, we don't necessarily endorse having plots with two y-axes.

This code is adapted from code by Jake Heare.

# Source: http://heareresearch.blogspot.com/2014/10/10-30-2014-dual-y-axis-graph-ggplot2_30.html

# Additional packages needed

library(gtable)

library(grid)

# Plot 1: Pheno data as bars, temp as scatter

grid.newpage()

phenoPlot_2 <- ggplot(phe_1sp_2018, aes(dateStat, countYes)) +

geom_bar(stat="identity", na.rm = TRUE) +

scale_x_date(breaks = date_breaks("1 month"), labels = date_format("%b")) +

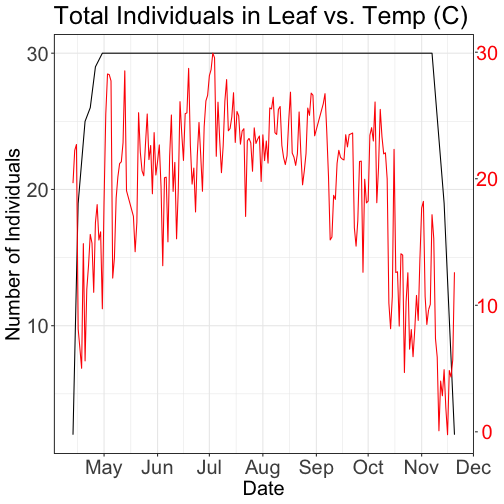

ggtitle("Total Individuals in Leaf vs. Temp (C)") +

xlab(" ") + ylab("Number of Individuals") +

theme_bw()+

theme(legend.justification=c(0,1),

legend.position=c(0,1),

plot.title=element_text(size=25,vjust=1),

axis.text.x=element_text(size=20),

axis.text.y=element_text(size=20),

axis.title.x=element_text(size=20),

axis.title.y=element_text(size=20))

tempPlot_dayMax_corr_2 <- ggplot() +

geom_point(data = temp_day_filt, aes(Date, dayMax),color="red") +

scale_x_date(breaks = date_breaks("months"), labels = date_format("%b")) +

xlab("") + ylab("Temp (C)") +

theme_bw() %+replace%

theme(panel.background = element_rect(fill = NA),

panel.grid.major.x=element_blank(),

panel.grid.minor.x=element_blank(),

panel.grid.major.y=element_blank(),

panel.grid.minor.y=element_blank(),

axis.text.y=element_text(size=20,color="red"),

axis.title.y=element_text(size=20))

g1<-ggplot_gtable(ggplot_build(phenoPlot_2))

g2<-ggplot_gtable(ggplot_build(tempPlot_dayMax_corr_2))

pp<-c(subset(g1$layout,name=="panel",se=t:r))

g<-gtable_add_grob(g1, g2$grobs[[which(g2$layout$name=="panel")]],pp$t,pp$l,pp$b,pp$l)

ia<-which(g2$layout$name=="axis-l")

ga <- g2$grobs[[ia]]

ax <- ga$children[[2]]

ax$widths <- rev(ax$widths)

ax$grobs <- rev(ax$grobs)

ax$grobs[[1]]$x <- ax$grobs[[1]]$x - unit(1, "npc") + unit(0.15, "cm")

g <- gtable_add_cols(g, g2$widths[g2$layout[ia, ]$l], length(g$widths) - 1)

g <- gtable_add_grob(g, ax, pp$t, length(g$widths) - 1, pp$b)

grid.draw(g)

# Plot 2: Both pheno data and temp data as line graphs

grid.newpage()

phenoPlot_3 <- ggplot(phe_1sp_2018, aes(dateStat, countYes)) +

geom_line(na.rm = TRUE) +

scale_x_date(breaks = date_breaks("months"), labels = date_format("%b")) +

ggtitle("Total Individuals in Leaf vs. Temp (C)") +

xlab("Date") + ylab("Number of Individuals") +

theme_bw()+

theme(legend.justification=c(0,1),

legend.position=c(0,1),

plot.title=element_text(size=25,vjust=1),

axis.text.x=element_text(size=20),

axis.text.y=element_text(size=20),

axis.title.x=element_text(size=20),

axis.title.y=element_text(size=20))

tempPlot_dayMax_corr_3 <- ggplot() +

geom_line(data = temp_day_filt, aes(Date, dayMax),color="red") +

scale_x_date(breaks = date_breaks("months"), labels = date_format("%b")) +

xlab("") + ylab("Temp (C)") +

theme_bw() %+replace%

theme(panel.background = element_rect(fill = NA),

panel.grid.major.x=element_blank(),

panel.grid.minor.x=element_blank(),

panel.grid.major.y=element_blank(),

panel.grid.minor.y=element_blank(),

axis.text.y=element_text(size=20,color="red"),

axis.title.y=element_text(size=20))

g1<-ggplot_gtable(ggplot_build(phenoPlot_3))

g2<-ggplot_gtable(ggplot_build(tempPlot_dayMax_corr_3))

pp<-c(subset(g1$layout,name=="panel",se=t:r))

g<-gtable_add_grob(g1, g2$grobs[[which(g2$layout$name=="panel")]],pp$t,pp$l,pp$b,pp$l)

ia<-which(g2$layout$name=="axis-l")

ga <- g2$grobs[[ia]]

ax <- ga$children[[2]]

ax$widths <- rev(ax$widths)

ax$grobs <- rev(ax$grobs)

ax$grobs[[1]]$x <- ax$grobs[[1]]$x - unit(1, "npc") + unit(0.15, "cm")

g <- gtable_add_cols(g, g2$widths[g2$layout[ia, ]$l], length(g$widths) - 1)

g <- gtable_add_grob(g, ax, pp$t, length(g$widths) - 1, pp$b)

grid.draw(g)