Workshop

A Hands-on Primer for Working with Big Data in R: Introduction to Common Formats & Efficient Data Visualization | ESA 2014

Ecological Society of America Annual Meeting

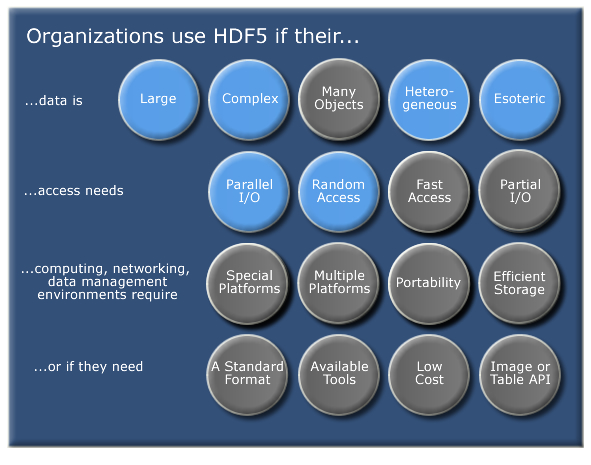

Ecologists working across scales and integrating disparate datasets face new challenges to data management and analysis that demand toolkits that go above and beyond the spreadsheet. This workshop will offer ecologists an overview of the variety of data formats and types that are typically encountered when working with ‘Big Data’, and an introduction to available tools in R for working with these formats. The first half of the workshop will introduce participants to these formats, including ASCII, NetCDF4, HDF5, and las.

Participants will then learn to (1) access and visualize large datasets in these formats using R, and (2) to use metadata to efficiently integrate datasets from multiple ecological data sources for analysis. In the second half of the workshop, we will apply the knowledge from the first half to work through a practical example of how to integrate field-collected vegetation structure data with remotely sensed LiDAR data. For this example, we will use data collected by the National Ecological Observatory Network (NEON), a continental-scale, NSF-funded effort to collect and freely serve terabytes of data per year (stored in a diversity of formats) over the next 30 years to enable ecological research. Participants will therefore leave the workshop with a basic understanding of the data that NEON and other large projects offer and some basic tools that support the use of Big Data to enhance their own research.

Workshop Instructors

- Ted Hart

- Leah A. Wasser

- Sarah Elmendorf

- Kate Thibault

Schedule

| Time | Topic |

|---|---|

| 12:00 | Welcome, Intro to NEON Data |

| 12:35 | HDF5 Formats |

| 1:30 | BREAK |

| 1:50 | Data Visualization |

| 2:50 | BREAK |

| 3:10 | Bringing It Together: Visualization & HDF5 metadata |

| 5:00 | End |

Hierarchical Data Formats - What is HDF5?

Learning Objectives

After completing this tutorial, you will be able to:

- Explain what the Hierarchical Data Format (HDF5) is.

- Describe the key benefits of the HDF5 format, particularly related to big data.

- Describe both the types of data that can be stored in HDF5 and how it can be stored/structured.

About Hierarchical Data Formats - HDF5

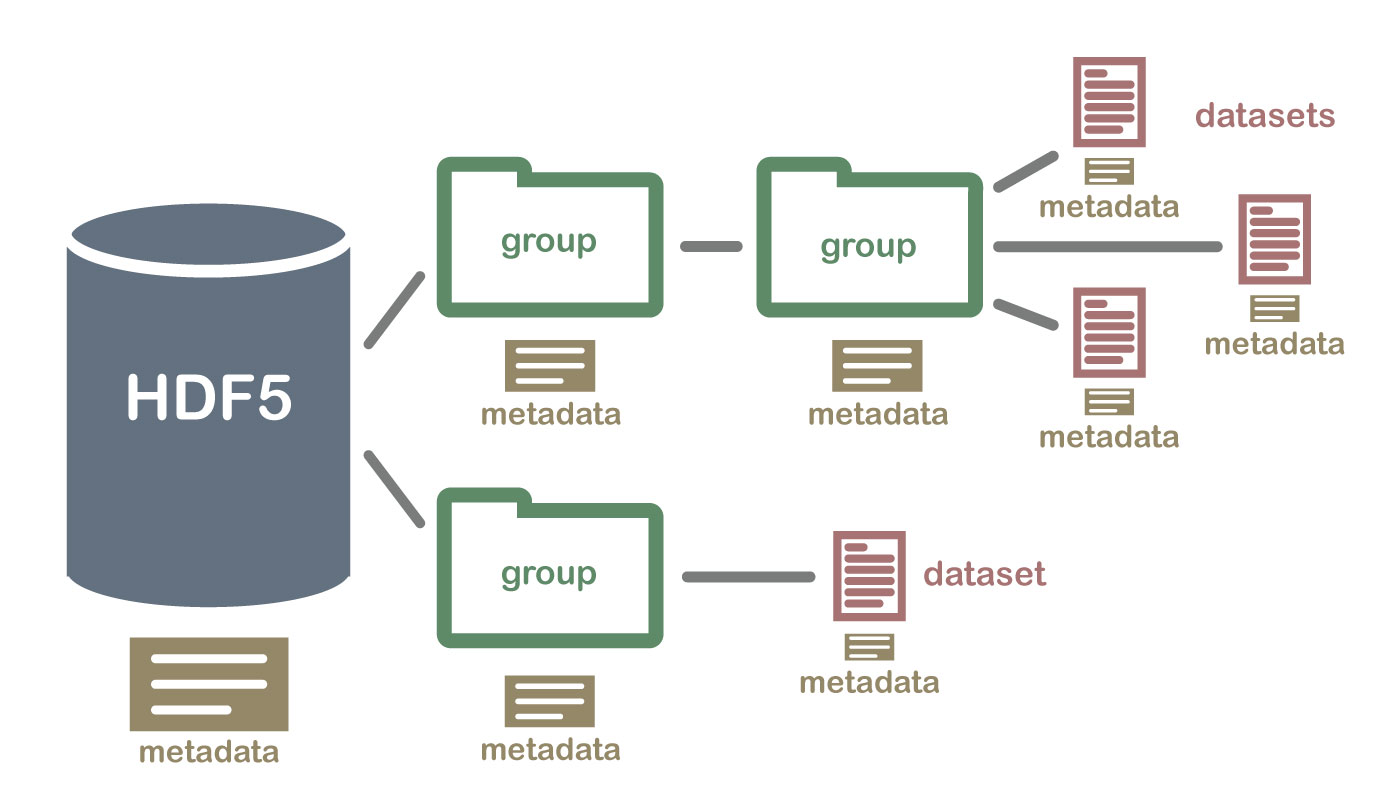

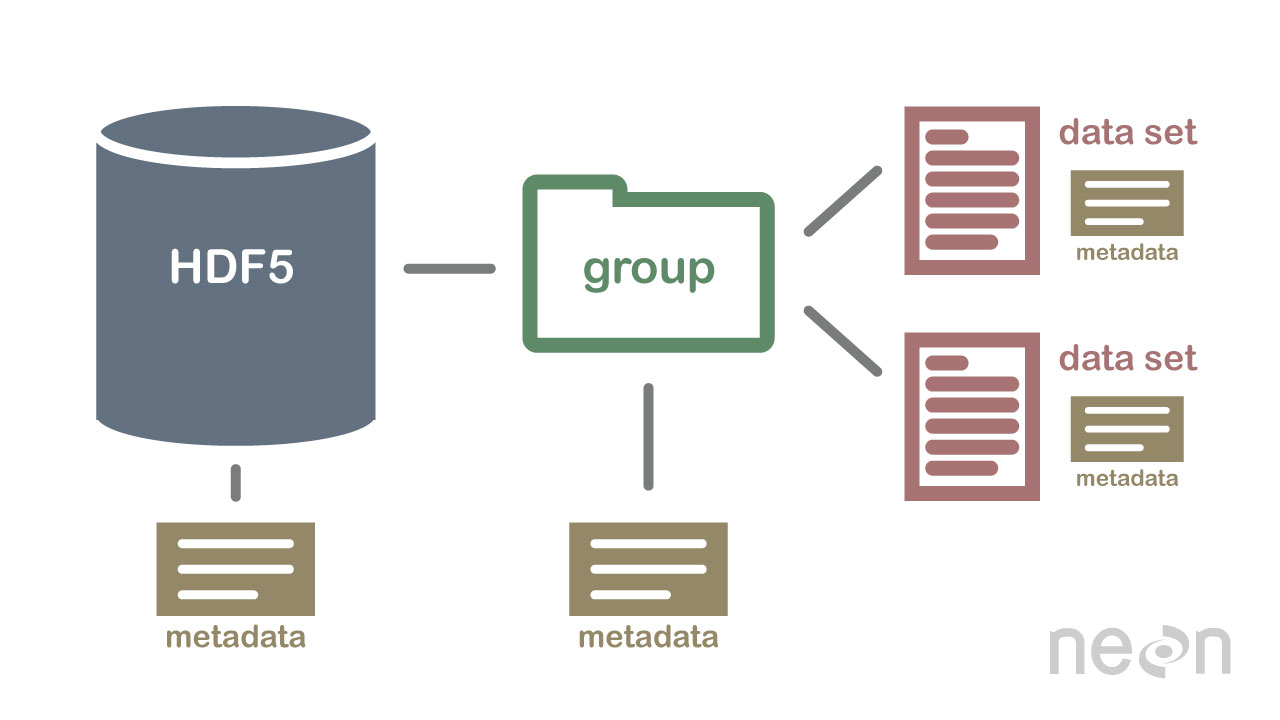

The Hierarchical Data Format version 5 (HDF5), is an open source file format that supports large, complex, heterogeneous data. HDF5 uses a "file directory" like structure that allows you to organize data within the file in many different structured ways, as you might do with files on your computer. The HDF5 format also allows for embedding of metadata making it self-describing.

Hierarchical Structure - A file directory within a file

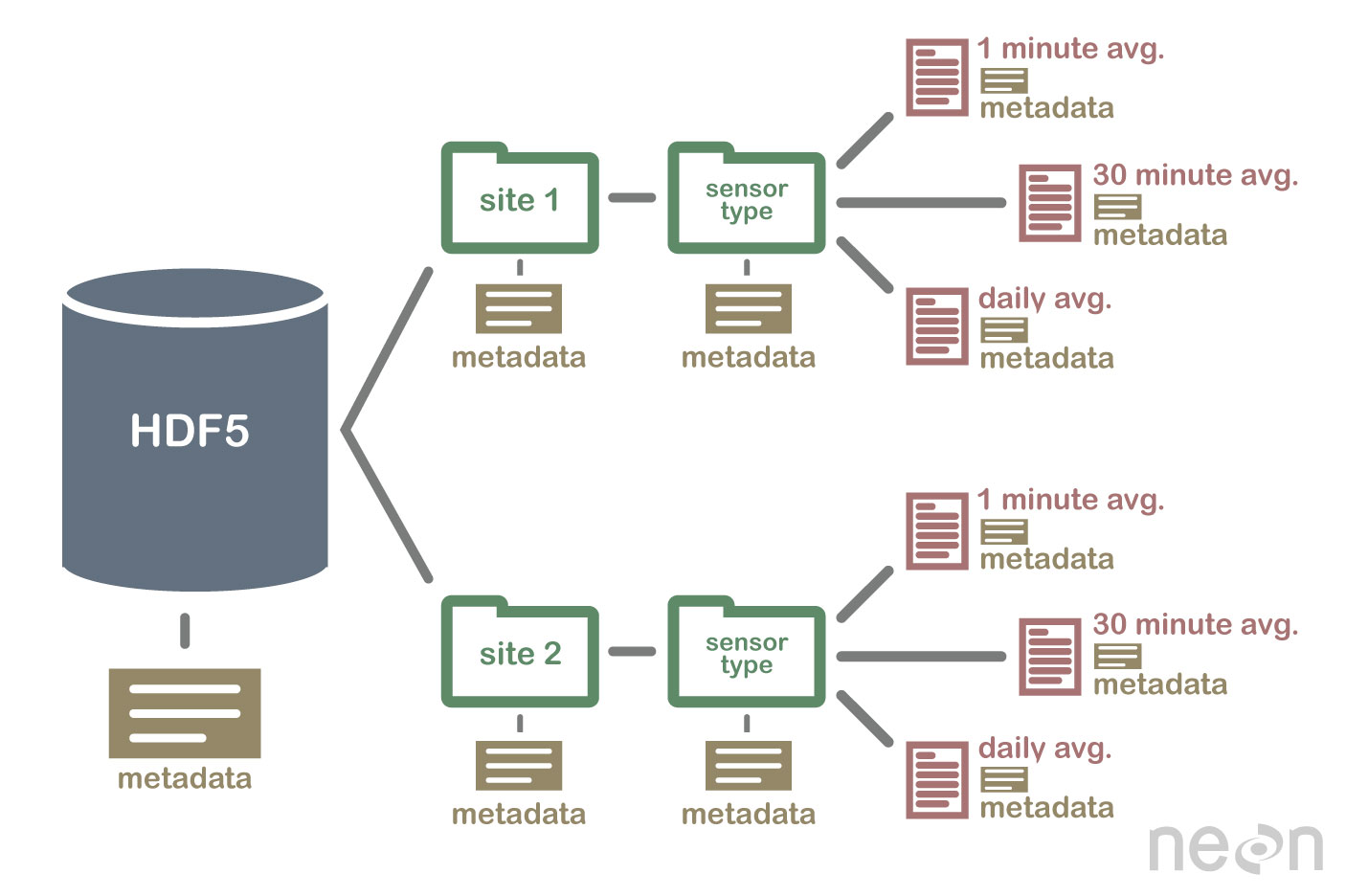

The HDF5 format can be thought of as a file system contained and described

within one single file. Think about the files and folders stored on your computer.

You might have a data directory with some temperature data for multiple field

sites. These temperature data are collected every minute and summarized on an

hourly, daily and weekly basis. Within one HDF5 file, you can store a similar

set of data organized in the same way that you might organize files and folders

on your computer. However in a HDF5 file, what we call "directories" or "folders"

on our computers, are called groups and what we call files on our

computer are called datasets.

2 Important HDF5 Terms

- Group: A folder like element within an HDF5 file that might contain other groups OR datasets within it.

- Dataset: The actual data contained within the HDF5 file. Datasets are often (but don't have to be) stored within groups in the file.

An HDF5 file containing datasets, might be structured like this:

HDF5 is a Self Describing Format

HDF5 format is self describing. This means that each file, group and dataset can have associated metadata that describes exactly what the data are. Following the example above, we can embed information about each site to the file, such as:

- The full name and X,Y location of the site

- Description of the site.

- Any documentation of interest.

Similarly, we might add information about how the data in the dataset were collected, such as descriptions of the sensor used to collect the temperature data. We can also attach information, to each dataset within the site group, about how the averaging was performed and over what time period data are available.

One key benefit of having metadata that are attached to each file, group and

dataset, is that this facilitates automation without the need for a separate

(and additional) metadata document. Using a programming language, like R or

Python, we can grab information from the metadata that are already associated

with the dataset, and which we might need to process the dataset.

Compressed & Efficient subsetting

The HDF5 format is a compressed format. The size of all data contained within

HDF5 is optimized which makes the overall file size smaller. Even when

compressed, however, HDF5 files often contain big data and can thus still be

quite large. A powerful attribute of HDF5 is data slicing, by which a

particular subsets of a dataset can be extracted for processing. This means that

the entire dataset doesn't have to be read into memory (RAM); very helpful in

allowing us to more efficiently work with very large (gigabytes or more) datasets!

Heterogeneous Data Storage

HDF5 files can store many different types of data within in the same file. For example, one group may contain a set of datasets to contain integer (numeric) and text (string) data. Or, one dataset can contain heterogeneous data types (e.g., both text and numeric data in one dataset). This means that HDF5 can store any of the following (and more) in one file:

- Temperature, precipitation and PAR (photosynthetic active radiation) data for a site or for many sites

- A set of images that cover one or more areas (each image can have specific spatial information associated with it - all in the same file)

- A multi or hyperspectral spatial dataset that contains hundreds of bands.

- Field data for several sites characterizing insects, mammals, vegetation and climate.

- A set of images that cover one or more areas (each image can have unique spatial information associated with it)

- And much more!

Open Format

The HDF5 format is open and free to use. The supporting libraries (and a free

viewer), can be downloaded from the

HDF Group

website. As such, HDF5 is widely supported in a host of programs, including

open source programming languages like R and Python, and commercial

programming tools like Matlab and IDL. Spatial data that are stored in HDF5

format can be used in GIS and imaging programs including QGIS, ArcGIS, and

ENVI.

Summary Points - Benefits of HDF5

- Self-Describing The datasets with an HDF5 file are self describing. This allows us to efficiently extract metadata without needing an additional metadata document.

- Supporta Heterogeneous Data: Different types of datasets can be contained within one HDF5 file.

- Supports Large, Complex Data: HDF5 is a compressed format that is designed to support large, heterogeneous, and complex datasets.

- Supports Data Slicing: "Data slicing", or extracting portions of the dataset as needed for analysis, means large files don't need to be completely read into the computers memory or RAM.

-

Open Format - wide support in the many tools: Because the HDF5 format is

open, it is supported by a host of programming languages and tools, including

open source languages like R and

Pythonand open GIS tools likeQGIS.

HDFView: Exploring HDF5 Files in the Free HDFview Tool

In this tutorial you will use the free HDFView tool to explore HDF5 files and the groups and datasets contained within. You will also see how HDF5 files can be structured and explore metadata using both spatial and temporal data stored in HDF5!

Learning Objectives

After completing this activity, you will be able to:

- Explain how data can be structured and stored in HDF5.

- Navigate to metadata in an HDF5 file, making it "self describing".

- Explore HDF5 files using the free HDFView application.

Tools You Will Need

Install the free HDFView application. This application allows you to explore the contents of an HDF5 file easily. Click here to go to the download page.

Data to Download

NOTE: The first file downloaded has an .HDF5 file extension, the second file downloaded below has an .h5 extension. Both extensions represent the HDF5 data type.

NEON Teaching Data Subset: Sample Tower Temperature - HDF5

These temperature data were collected by the National Ecological Observatory Network's flux towers at field sites across the US. The entire dataset can be accessed by request from the NEON Data Portal.

Download DatasetDownload NEON Teaching Data Subset: Imaging Spectrometer Data - HDF5

These hyperspectral remote sensing data provide information on the National Ecological Observatory Network's San Joaquin Exerimental Range field site. The data were collected over the San Joaquin field site located in California (Domain 17) and processed at NEON headquarters. The entire dataset can be accessed by request from the NEON Data Portal.

Download DatasetInstalling HDFView

Select the HDFView download option that matches the operating system (Mac OS X, Windows, or Linux) and computer setup (32 bit vs 64 bit) that you have.

This tutorial was written with graphics from the VS 2012 version, but it is applicable to other versions as well.

Hierarchical Data Format 5 - HDF5

Hierarchical Data Format version 5 (HDF5), is an open file format that supports large, complex, heterogeneous data. Some key points about HDF5:

- HDF5 uses a "file directory" like structure.

- The HDF5 data models organizes information using

Groups. Each group may contain one or moredatasets. - HDF5 is a self describing file format. This means that the metadata for the data contained within the HDF5 file, are built into the file itself.

- One HDF5 file may contain several heterogeneous data types (e.g. images, numeric data, data stored as strings).

For more introduction to the HDF5 format, see our About Hierarchical Data Formats - What is HDF5? tutorial.

In this tutorial, we will explore two different types of data saved in HDF5. This will allow us to better understand how one file can store multiple different types of data, in different ways.

Part 1: Exploring Temperature Data in HDF5 Format in HDFView

The first thing that we will do is open an HDF5 file in the viewer to get a better idea of how HDF5 files can be structured.

Open a HDF5/H5 file in HDFView

To begin, open the HDFView application.

Within the HDFView application, select File --> Open and navigate to the folder

where you saved the NEONDSTowerTemperatureData.hdf5 file on your computer. Open this file in HDFView.

If you click on the name of the HDF5 file in the left hand window of HDFView, you can view metadata for the file. This will be located in the bottom window of the application.

Explore File Structure in HDFView

Next, explore the structure of this file. Notice that there are two Groups (represented as folder icons in the viewer) called "Domain_03" and "Domain_10". Within each domain group, there are site groups (NEON sites that are located within those domains). Expand these folders by double clicking on the folder icons. Double clicking expands the groups content just as you might expand a folder in Windows explorer.

Notice that there is metadata associated with each group.

Double click on the OSBS group located within the Domain_03 group. Notice in

the metadata window that OSBS contains data collected from the

NEON Ordway-Swisher Biological Station field site.

Within the OSBS group there are two more groups - Min_1 and Min_30. What data

are contained within these groups?

Expand the "min_1" group within the OSBS site in Domain_03. Notice that there are five more nested groups named "Boom_1, 2, etc". A boom refers to an arm on a tower, which sits at a particular height and to which are attached sensors for collecting data on such variables as temperature, wind speed, precipitation, etc. In this case, we are working with data collected using temperature sensors, mounted on the tower booms.

Speaking of temperature - what type of sensor is collected the data within the boom_1 folder at the Ordway Swisher site? HINT: check the metadata for that dataset.

Expand the "Boom_1" folder by double clicking it. Finally, we have arrived at a dataset! Have a look at the metadata associated with the temperature dataset within the boom_1 group. Notice that there is metadata describing each attribute in the temperature dataset. Double click on the group name to open up the table in a tabular format. Notice that these data are temporal.

So this is one example of how an HDF5 file could be structured. This particular file contains data from multiple sites, collected from different sensors (mounted on different booms on the tower) and collected over time. Take some time to explore this HDF5 dataset within the HDFViewer.

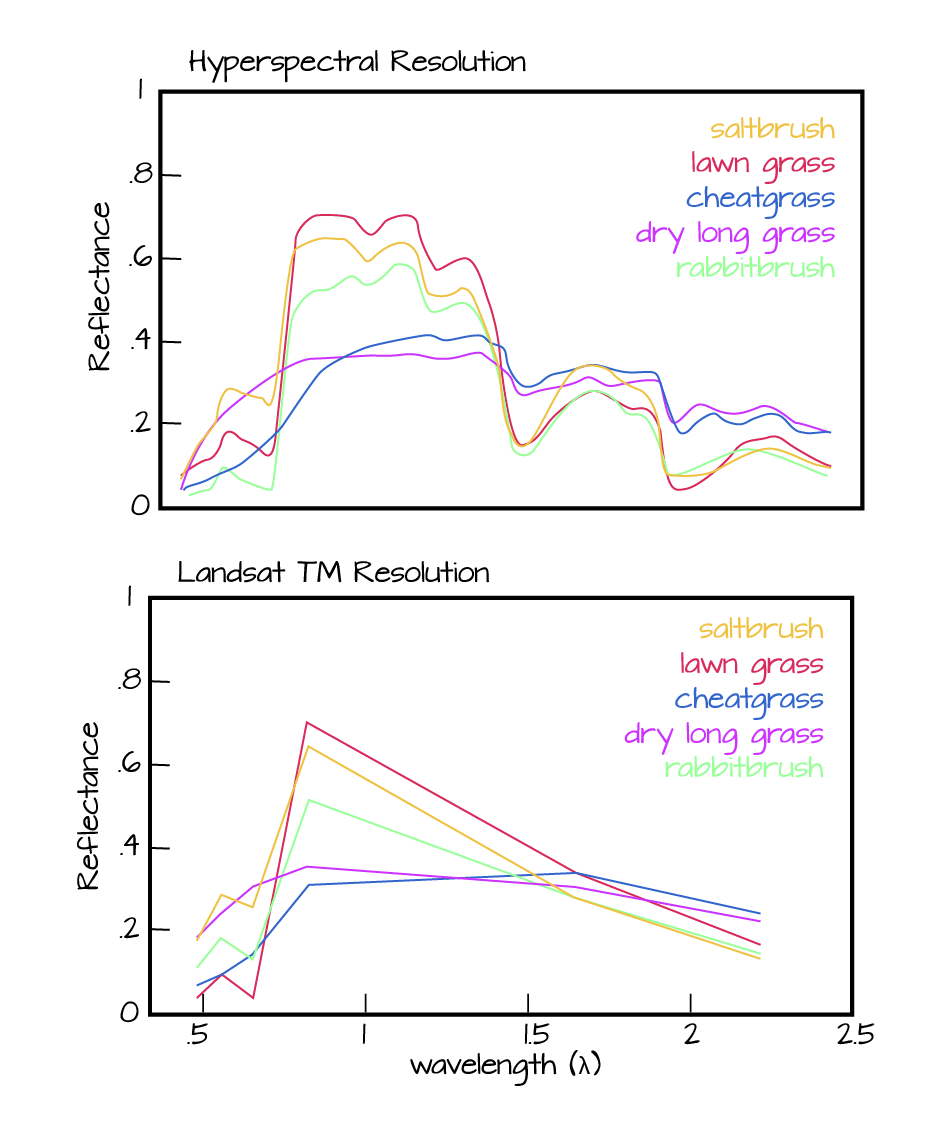

Part 2: Exploring Hyperspectral Imagery stored in HDF5

Next, we will explore a hyperspectral dataset, collected by the NEON Airborne Observation Platform (AOP) and saved in HDF5 format. Hyperspectral data are naturally hierarchical, as each pixel in the dataset contains reflectance values for hundreds of bands collected by the sensor. The NEON sensor (imaging spectrometer) collected data within 428 bands.

A few notes about hyperspectral imagery:

- An imaging spectrometer, which collects hyperspectral imagery, records light energy reflected off objects on the earth's surface.

- The data are inherently spatial. Each "pixel" in the image is located spatially and represents an area of ground on the earth.

- Similar to an Red, Green, Blue (RGB) camera, an imaging spectrometer records reflected light energy. Each pixel will contain several hundred bands worth of reflectance data.

Read more about hyperspectral remote sensing data:

Let's open some hyperspectral imagery stored in HDF5 format to see what the file structure can like for a different type of data.

Open the file. Notice that it is structured differently. This file is composed of 3 datasets:

- Reflectance,

- fwhm, and

- wavelength.

It also contains some text information called "map info". Finally it contains a group called spatial info.

Let's first look at the metadata stored in the spatialinfo group. This group contains all of the spatial information that a GIS program would need to project the data spatially.

Next, double click on the wavelength dataset. Note that this dataset contains the central wavelength value for each band in the dataset.

Finally, click on the reflectance dataset. Note that in the metadata for the dataset that the structure of the dataset is 426 x 501 x 477 (wavelength, line, sample), as indicated in the metadata. Right click on the reflectance dataset and select Open As. Click Image in the "display as" settings on the left hand side of the popup.

In this case, the image data are in the second and third dimensions of this dataset. However, HDFView will default to selecting the first and second dimensions

Let’s tell the HDFViewer to use the second and third dimensions to view the image:

- Under

height, make suredim 1is selected. - Under

width, make suredim 2is selected.

Notice an image preview appears on the left of the pop-up window. Click OK to open the image. You may have to play with the brightness and contrast settings in the viewer to see the data properly.

Explore the spectral dataset in the HDFViewer taking note of the metadata and data stored within the file.

Introduction to HDF5 Files in R

Learning Objectives

After completing this tutorial, you will be able to:

- Understand how HDF5 files can be created and structured in R using the rhdf5 libraries.

- Understand the three key HDF5 elements: the HDF5 file itself, groups, and datasets.

- Understand how to add and read attributes from an HDF5 file.

Things You’ll Need To Complete This Tutorial

To complete this tutorial you will need the most current version of R and, preferably, RStudio loaded on your computer.

R Libraries to Install:

- rhdf5: The rhdf5 package is hosted on Bioconductor not CRAN. Directions for installation are in the first code chunk.

More on Packages in R – Adapted from Software Carpentry.

Data to Download

We will use the file below in the optional challenge activity at the end of this tutorial.

NEON Teaching Data Subset: Field Site Spatial Data

These remote sensing data files provide information on the vegetation at the National Ecological Observatory Network's San Joaquin Experimental Range and Soaproot Saddle field sites. The entire dataset can be accessed by request from the NEON Data Portal.

Download DatasetSet Working Directory: This lesson assumes that you have set your working directory to the location of the downloaded and unzipped data subsets.

An overview of setting the working directory in R can be found here.

R Script & Challenge Code: NEON data lessons often contain challenges that reinforce learned skills. If available, the code for challenge solutions is found in the downloadable R script of the entire lesson, available in the footer of each lesson page.

Additional Resources

Consider reviewing the documentation for the RHDF5 package.

About HDF5

The HDF5 file can store large, heterogeneous datasets that include metadata. It

also supports efficient data slicing, or extraction of particular subsets of a

dataset which means that you don't have to read large files read into the

computers memory / RAM in their entirety in order work with them.

HDF5 in R

To access HDF5 files in R, we will use the rhdf5 library which is part of

the Bioconductor

suite of R libraries. It might also be useful to install

the

free HDF5 viewer

which will allow you to explore the contents of an HDF5 file using a graphic interface.

More about working with HDFview and a hands-on activity here.

First, let's get R setup. We will use the rhdf5 library. To access HDF5 files in R, we will use the rhdf5 library which is part of the Bioconductor suite of R packages. As of May 2020 this package was not yet on CRAN.

# Install rhdf5 package (only need to run if not already installed)

#install.packages("BiocManager")

#BiocManager::install("rhdf5")

# Call the R HDF5 Library

library("rhdf5")

# set working directory to ensure R can find the file we wish to import and where

# we want to save our files

wd <- "~/Git/data/" #This will depend on your local environment

setwd(wd)

Read more about the

rhdf5 package here.

Create an HDF5 File in R

Now, let's create a basic H5 file with one group and one dataset in it.

# Create hdf5 file

h5createFile("vegData.h5")

## [1] TRUE

# create a group called aNEONSite within the H5 file

h5createGroup("vegData.h5", "aNEONSite")

## [1] TRUE

# view the structure of the h5 we've created

h5ls("vegData.h5")

## group name otype dclass dim

## 0 / aNEONSite H5I_GROUP

Next, let's create some dummy data to add to our H5 file.

# create some sample, numeric data

a <- rnorm(n=40, m=1, sd=1)

someData <- matrix(a,nrow=20,ncol=2)

Write the sample data to the H5 file.

# add some sample data to the H5 file located in the aNEONSite group

# we'll call the dataset "temperature"

h5write(someData, file = "vegData.h5", name="aNEONSite/temperature")

# let's check out the H5 structure again

h5ls("vegData.h5")

## group name otype dclass dim

## 0 / aNEONSite H5I_GROUP

## 1 /aNEONSite temperature H5I_DATASET FLOAT 20 x 2

View a "dump" of the entire HDF5 file. NOTE: use this command with CAUTION if you are working with larger datasets!

# we can look at everything too

# but be cautious using this command!

h5dump("vegData.h5")

## $aNEONSite

## $aNEONSite$temperature

## [,1] [,2]

## [1,] 0.33155432 2.4054446

## [2,] 1.14305151 1.3329978

## [3,] -0.57253964 0.5915846

## [4,] 2.82950139 0.4669748

## [5,] 0.47549005 1.5871517

## [6,] -0.04144519 1.9470377

## [7,] 0.63300177 1.9532294

## [8,] -0.08666231 0.6942748

## [9,] -0.90739256 3.7809783

## [10,] 1.84223101 1.3364965

## [11,] 2.04727590 1.8736805

## [12,] 0.33825921 3.4941913

## [13,] 1.80738042 0.5766373

## [14,] 1.26130759 2.2307994

## [15,] 0.52882731 1.6021497

## [16,] 1.59861449 0.8514808

## [17,] 1.42037674 1.0989390

## [18,] -0.65366487 2.5783750

## [19,] 1.74865593 1.6069304

## [20,] -0.38986048 -1.9471878

# Close the file. This is good practice.

H5close()

Add Metadata (attributes)

Let's add some metadata (called attributes in HDF5 land) to our dummy temperature data. First, open up the file.

# open the file, create a class

fid <- H5Fopen("vegData.h5")

# open up the dataset to add attributes to, as a class

did <- H5Dopen(fid, "aNEONSite/temperature")

# Provide the NAME and the ATTR (what the attribute says) for the attribute.

h5writeAttribute(did, attr="Here is a description of the data",

name="Description")

h5writeAttribute(did, attr="Meters",

name="Units")

Now we can add some attributes to the file.

# let's add some attributes to the group

did2 <- H5Gopen(fid, "aNEONSite/")

h5writeAttribute(did2, attr="San Joaquin Experimental Range",

name="SiteName")

h5writeAttribute(did2, attr="Southern California",

name="Location")

# close the files, groups and the dataset when you're done writing to them!

H5Dclose(did)

H5Gclose(did2)

H5Fclose(fid)

Working with an HDF5 File in R

Now that we've created our H5 file, let's use it! First, let's have a look at the attributes of the dataset and group in the file.

# look at the attributes of the precip_data dataset

h5readAttributes(file = "vegData.h5",

name = "aNEONSite/temperature")

## $Description

## [1] "Here is a description of the data"

##

## $Units

## [1] "Meters"

# look at the attributes of the aNEONsite group

h5readAttributes(file = "vegData.h5",

name = "aNEONSite")

## $Location

## [1] "Southern California"

##

## $SiteName

## [1] "San Joaquin Experimental Range"

# let's grab some data from the H5 file

testSubset <- h5read(file = "vegData.h5",

name = "aNEONSite/temperature")

testSubset2 <- h5read(file = "vegData.h5",

name = "aNEONSite/temperature",

index=list(NULL,1))

H5close()

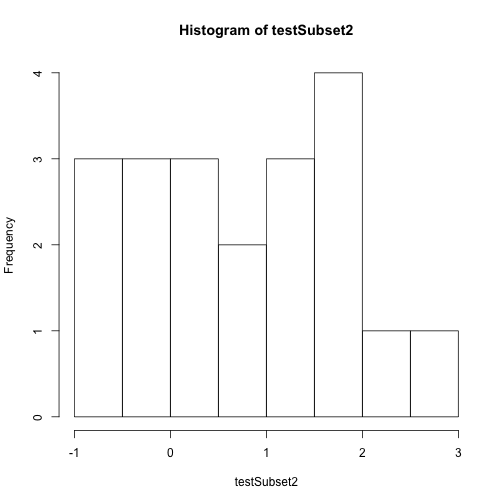

Once we've extracted data from our H5 file, we can work with it in R.

# create a quick plot of the data

hist(testSubset2)

Time to practice the skills you've learned. Open up the D17_2013_SJER_vegStr.csv in R.

- Create a new HDF5 file called

vegStructure. - Add a group in your HDF5 file called

SJER. - Add the veg structure data to that folder.

- Add some attributes the SJER group and to the data.

- Now, repeat the above with the D17_2013_SOAP_vegStr csv.

- Name your second group SOAP

Hint: read.csv() is a good way to read in .csv files.