Data Management

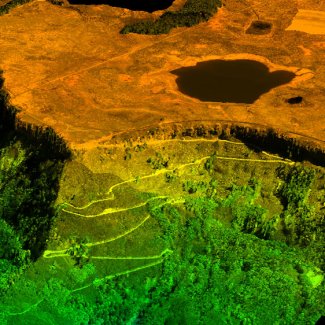

Lidar data showing Grand Mesa

NEON relies on computing software and hardware to manage thousands of sensors, billions of data points, and terabytes of output data. Sensors and technicians collect data from sites spread across the nation. The cyber infrastructure team coordinates the transfer of data from field sites to NEON's central data center.

Working with science and engineering staff, the team 1) standardizes and automates data collection and processing tasks; 2) stores and processes data; and 3) develops relevant operational tools, such as monitoring, alerting, and mobile applications. Special attention is also paid to how data are documented, through human- and machine-readable formats.

Click on any of the topics below, or scroll down to learn more about data storage, software development, and documentation for data interoperability.

Data Availability

Data availability can depend on the expected sampling frequency and timing for any given data product throughout a year, as well as how long it might take for data to go from the point of collection through the data processing pipelines and finally to the portal for use.

Data Formats and Conventions

Careful attention to how data are organized, named, and documented is critical to management and use of NEON data. Learn more about how we format, name, and document data.

Data Quality

In order to test ideas about how ecosystems function or change over time, it is essential to obtain and use data that are fit for the intended analyses. Using good methodologies or well-designed instruments is important, but other measures must also be taken to ensure that data are fit for research.

Data Product Revisions and Releases

Some data products may be revised over the years as methods or technologies improve. We also provide data in a provisional form, and will later release data in final form.

Externally Hosted Data

Numerous NEON data products are hosted at external repositories that best support specialized data, such as surface-atmosphere fluxes of carbon, water, and energy, and DNA sequences.

Data Processing

Providing standardized, quality-assured data products is essential to NEON's mission of providing open data to support greater understanding of complex ecological processes at local, regional and continental scales.

Data Storage

NEON hosts compute infrastructure in its Denver, CO data center, Google’s cloud platform, and Amazon Web Services. The data center houses servers, storage, networking, and associated peripherals for the NEON project. NEON uses object storage technologies for primary storage of NEON data products. Object storage is split between Google’s cloud storage and Amazon’s S3 storage service. Additional compute services are used in Google’s cloud platform as necessary to support the project.

Software Development

NEON's design relies on algorithms and processes to convert raw field measurements and observations into calibrated, documented, and quality-controlled data products. Delivering the immense volume of diverse sensor-derived data that NEON collects in a user-friendly format requires large-scale automation and computing power. NEON scientists collaborate with cyber infrastructure staff to create data processing algorithms and frameworks that

- Collect and centralize data from thousands of sensors and hundreds of field scientists;

- Process incoming data to create derived data products;

- Assess the quality and integrity of data products; and

- Deliver optimized, useable, high-value data products.

For example, NEON flags sensor-derived data that are out of normal range or implausible, such as a species size measurement outside of the known range. NEON also conducts random recounts, cross-checks collected data against existing data, and reconciles conflicting data using documented quality-control methods.
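The range check described above can be illustrated with a minimal sketch. The flag codes (0 for pass, 1 for fail, -1 for not testable), the `RangeRule` class, and the temperature bounds are all assumptions made for this example and are not taken from NEON's processing code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RangeRule:
    """Plausible bounds for one variable (hypothetical values, not NEON's)."""
    minimum: float
    maximum: float


def range_flag(value: Optional[float], rule: RangeRule) -> int:
    """Return 0 if the value is in range, 1 if out of range, -1 if missing."""
    if value is None:
        return -1
    return 0 if rule.minimum <= value <= rule.maximum else 1


# Hypothetical bounds for air temperature in degrees Celsius.
air_temp_rule = RangeRule(minimum=-60.0, maximum=60.0)

readings = [12.4, 75.0, None, -3.1]
flags = [range_flag(reading, air_temp_rule) for reading in readings]
print(flags)  # [0, 1, -1, 0]
```

Keeping the flag alongside the value, rather than dropping the reading, lets a data user decide how to treat questionable measurements.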

Approach

NEON uses a collaborative process (Agile software development), with software engineers and scientists partnering to develop the code that supports data collection, processing, publication, and distribution. Input comes from multiple sources, including members of other departments at NEON, external collaborators, and end users. Development projects are scoped and then prioritized by internal mixed-department teams.

Data Collection

For processes that require people to collect data in the field, NEON scientists and software developers have leveraged the Fulcrum platform to develop a series of sophisticated, rule-based applications tailored to each specific data collection protocol. These custom applications are then served to field scientists on digital tablets, allowing for real-time quality assurance of the data during collection.

Learn more about data and sample collection

Data Ingest and Processing

NEON develops and maintains custom software to ingest data from sensors and Fulcrum apps. Streaming data from sensors are continually monitored for issues with data quality and quantity. Potential failure points in the ingest pipeline are logged and validated. Software has also been developed to monitor the near-real-time health of sensors at the field sites, to facilitate rapid alerts of outages and improve response time.
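One way to picture monitoring a stream for data quantity is a gap check on reading timestamps. The sketch below is illustrative only: the function name `find_gaps`, the one-minute reporting interval, and the 1.5x tolerance are assumptions, not details of NEON's monitoring software.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def find_gaps(
    timestamps: List[datetime],
    expected_interval: timedelta,
    tolerance: float = 1.5,
) -> List[Tuple[datetime, datetime]]:
    """Return (previous, next) pairs of consecutive readings that are
    further apart than tolerance * expected_interval."""
    ordered = sorted(timestamps)
    limit = expected_interval * tolerance
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > limit]


# Hypothetical stream: one reading per minute, with readings missing
# between minute 2 and minute 7.
start = datetime(2023, 1, 1, 0, 0)
stream = [start + timedelta(minutes=m) for m in (0, 1, 2, 7, 8)]

gaps = find_gaps(stream, expected_interval=timedelta(minutes=1))
for previous, following in gaps:
    print(f"No data between {previous} and {following}")
```

A check like this could feed an alerting step, so that a silent sensor is noticed soon after it stops reporting.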

NEON developed pipelines to clean and process raw data into higher-level products. QA/QC measures are performed as early as possible and at multiple points in the data processing pipeline, as are checks of system state of health and performance. For observational data, scientists produce machine-readable workbooks that describe data processing rules, using an in-house language called NEON Ingest Conversion Language, or nicl. These workbooks provide a flexible method by which processing rules can be updated as needed. For instrumented and AOP data, scientists are involved in developing the algorithms and modules within the processing code.

The data processing algorithms that have been coded into the pipeline are described in detail by Algorithm Theoretical Basis Documents (ATBDs), which are available for download from the NEON Document Library. Most of the processing code is currently available to the scientific community by request, but we are working toward open-sourcing our code. Raw (L0) data are never deleted, except in cases of obvious errors with sensors, communications systems, or field collection.

Learn more about data processing

Data Publication

Data publication involves writing processed data into formatted files and bundling the files with associated metadata and documentation into data products. These products are made available to the scientific community through NEON's data portal and API. The publication software is written to correctly associate data streams into a bundle, generate metadata files in both human- and machine-readable formats, and store the files in the ECS where they can be accessed later by end users.
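As a rough illustration of bundling files with machine-readable metadata, the sketch below builds a JSON manifest that lists each file in a package with its size and checksum. The manifest layout, the field names, and the `build_manifest` helper are hypothetical and do not reflect the format NEON's publication software actually writes.

```python
import hashlib
import json
from typing import Dict


def build_manifest(product_id: str, files: Dict[str, bytes]) -> str:
    """Describe a bundle of files as a JSON document (hypothetical layout)."""
    entries = [
        {
            "name": name,
            "sizeBytes": len(content),
            "sha256": hashlib.sha256(content).hexdigest(),
        }
        for name, content in sorted(files.items())
    ]
    return json.dumps({"productId": product_id, "files": entries}, indent=2)


# Hypothetical package contents held in memory for the example.
bundle = {
    "readme.txt": b"Describes the files in this package.\n",
    "data.csv": b"time,value\n2023-01-01T00:00Z,12.4\n",
}

manifest = build_manifest("EXAMPLE.PRODUCT", bundle)
print(manifest)
```

Recording a checksum per file gives end users a simple way to confirm that a download matches what was published.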

Learn more about data publication and releases

User interfaces and API

There are two primary user interfaces to access information about NEON, data, and samples.

The NEON website, https://www.neonscience.org, is a Drupal website that hosts basic content, such as blog posts and this webpage, as well as associated media and documents. Data portal applications are implemented in React / JavaScript. React apps are open source and are developed in parallel with an open source library of core components.

The other primary interface is the Biorepository portal, which is built in Symbiota, a specialized platform for species collection information, and is developed and managed by Biorepository staff. In addition, NEON maintains an Application Programming Interface to assist with programmatic querying of data and metadata.
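Programmatic querying might look like the following sketch, which uses only the Python standard library. The base URL, the `/products/{code}` path, and the example product code are assumptions for illustration; consult the current NEON API documentation for the authoritative endpoints and response structure.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen

# Assumed base URL; confirm against the current NEON API documentation.
API_BASE = "https://data.neonscience.org/api/v0"


def product_url(product_code: str) -> str:
    """Build the URL for one data product's metadata (assumed path layout)."""
    return f"{API_BASE}/products/{quote(product_code, safe='')}"


def fetch_product(product_code: str, timeout: float = 30.0) -> dict:
    """Request product metadata and decode the JSON response body."""
    with urlopen(product_url(product_code), timeout=timeout) as response:
        return json.load(response)


# Building the URL needs no network access; the code is a placeholder.
print(product_url("DP1.00001.001"))
```

Calling `fetch_product` requires network access, so it is left out of the example run above.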

Documentation and Interoperability

Making data discoverable, interoperable, and ready for reuse requires consideration of many factors, including human- and machine-readable forms of documentation; well-defined naming conventions or unique identifiers for everything from data streams to files; and protocols for transferring information between systems. We develop standardized documentation, some of which is readable by machines.

Interoperability - Naming Conventions and Formats

Where possible, NEON uses existing vocabularies or ontologies to describe variables or data streams. These include Darwin Core terms, the Global Biodiversity Information Facility vocabularies, and the VegCore data dictionary. In addition, data files are formatted to enhance interoperability between NEON data products and with data from other research programs. This includes the use of CSV, HDF5, LAS/LAZ, and GeoTIFF file formats.

Documentation

Human-readable documentation is provided in text and PDF files. Each data product includes README files that describe the data product, as well as any files that are included in a downloaded package. In addition, end users can choose to include PDF files that may describe data collection and sample processing protocols, sensor placement in the field, algorithms used in data processing, calibration procedures, and other components of the data life cycle.

Machine-readable documentation is developed using community standards and established schemas. For NEON, this is mostly through three mechanisms: 1) metadata files generated based on the Ecological Metadata Language (EML) schema, which describe data products and the files that comprise data packages; 2) metadata embedded into the Hierarchical Data Format (HDF5) that NEON uses for eddy covariance and AOP data products; and 3) JSON-LD files that follow schema.org conventions, extended with patterns defined by the Schema.org cluster within the Earth Science Information Partners (ESIP) organization.
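To give a sense of the third mechanism, the sketch below writes a small JSON-LD record using the schema.org `Dataset` type. The `dataset_jsonld` helper and every value passed to it are hypothetical; the records NEON publishes include more properties, including the ESIP-defined extensions mentioned above, which are not shown here.

```python
import json
from typing import List


def dataset_jsonld(name: str, description: str, url: str,
                   keywords: List[str]) -> str:
    """Serialize a minimal schema.org Dataset description as JSON-LD."""
    record = {
        "@context": "https://schema.org/",
        "@type": "Dataset",
        "name": name,
        "description": description,
        "url": url,
        "keywords": keywords,
    }
    return json.dumps(record, indent=2)


# Hypothetical values for illustration only.
doc = dataset_jsonld(
    name="Example air temperature product",
    description="Air temperature averaged over fixed intervals.",
    url="https://example.org/data-products/example",
    keywords=["air temperature", "ecology"],
)
print(doc)
```

Because the record is plain JSON with a shared vocabulary, search engines and data catalogs can read it without custom parsing.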

Last updated 2023-10-17